This tutorial is a work in progress and does not function on the current VM.

Welcome to the Distributed ONOS Tutorial.

In this tutorial you will learn to write a distributed ONOS application. The application you will be writing is called BYON (Bring Your Own Network). This tutorial will teach you how to implement an ONOS service, an ONOS store (both trivial and distributed) and how to use parts of the CLI and Northbound API. First, you will start with a single instance implementation which will be fully functional. After this, you will implement a distributed implementation of the service. The idea here will be for you to see how simple transitioning from a trivial single instance implementation to a distributed implementation is. Believe it or not, most of your code does not change.

Introduction

Pre-requisites

You will need a computer with at least 2GB of RAM and at least 5GB of free hard disk space. A faster processor or solid-state drive will speed up the virtual machine boot time, and a larger screen will help to manage multiple terminal windows.

The computer can run Windows, Mac OS X, or Linux – all work fine with VirtualBox, the only software requirement.

To install VirtualBox, you will need administrative access to the machine.

The tutorial instructions require prior knowledge of SDN in general, and OpenFlow and Mininet in particular, so please complete the OpenFlow tutorial and the Mininet walkthrough first. Familiarity with Apache Karaf is helpful but not required.

Stuck? Found a bug? Questions?

Email us if you’re stuck, think you’ve found a bug, or just want to send some feedback. Please have a look at the guidelines to learn how to efficiently submit a bug report.

Set up your environment

Install required software

You will need to acquire two files: a VirtualBox installer and the Tutorial VM.

After you have downloaded VirtualBox, install it, then go to the next section to verify that the VM is working on your system.

Create Virtual Machine

Double-click on the downloaded tutorial zipfile. This will give you an OVF file. Open the OVF file; this will open VirtualBox with an import dialog. Make sure you provision your VM with 4GB of RAM and, if possible, 4 CPUs; if not, 2 CPUs should be ok.

Click on import. When the import is finished, start the VM and log in using:

USERNAME: distributed

PASSWORD: distributed

Important Command Prompt Notes

In this tutorial, commands are shown along with a command prompt to indicate the subsystem for which they are intended.

For example,

onos>

indicates that you are in the ONOS command line, whereas

mininet>

indicates that you are in mininet.

Start up multiple docker instances

We will be using docker to spawn multiple ONOS instances. So before we dive into the code, let's provision some docker instances that will run ONOS. First, you should see that there is already an ONOS distributed tutorial image present on your system:

distributed@mininet-vm:~/onos$ sudo docker images
REPOSITORY           TAG      IMAGE ID       CREATED        VIRTUAL SIZE
onos/tutorial-dist   latest   666b3c862984   13 hours ago   666.1 MB
ubuntu-upstart       14.10    e2b2af39309a   7 days ago     264.2 MB

At this point you are ready to spawn your ONOS instances. To do this we will spawn three docker instances that will be detached and running in the background.

distributed@mininet-vm:~/onos$ sudo docker run -t -P -i -d --name onos-1 onos/tutorial-dist
<docker-instance-id>
distributed@mininet-vm:~/onos$ sudo docker run -t -P -i -d --name onos-2 onos/tutorial-dist
<docker-instance-id>
distributed@mininet-vm:~/onos$ sudo docker run -t -P -i -d --name onos-3 onos/tutorial-dist
<docker-instance-id>

If you get the following error message:

distributed@mininet-vm:~$ sudo docker run -t -P -i -d --name onos-1 onos/tutorial-dist
2014/12/11 10:55:53 Error response from daemon: Conflict, The name onos-1 is already assigned to 26d8c84f8a50. You have to delete (or rename) that container to be able to assign onos-1 to a container again.
distributed@mininet-vm:~$

which should only happen if you have already built your docker instance, then you only need to start it:

distributed@mininet-vm:~$ sudo docker start onos-1

Now you should have three docker instances up and running:

distributed@mininet-vm:~$ sudo docker ps
CONTAINER ID   IMAGE                       COMMAND        CREATED              STATUS              PORTS                                                                      NAMES
fc08370eb3d0   onos/tutorial-dist:latest   "/sbin/init"   About a minute ago   Up About a minute   0.0.0.0:49168->22/tcp, 0.0.0.0:49169->6633/tcp, 0.0.0.0:49170->8181/tcp   onos-3
bc725b09deed   onos/tutorial-dist:latest   "/sbin/init"   About a minute ago   Up About a minute   0.0.0.0:49165->22/tcp, 0.0.0.0:49166->6633/tcp, 0.0.0.0:49167->8181/tcp   onos-2
26d8c84f8a50   onos/tutorial-dist:latest   "/sbin/init"   About a minute ago   Up About a minute   0.0.0.0:49162->22/tcp, 0.0.0.0:49163->6633/tcp, 0.0.0.0:49164->8181/tcp   onos-1
distributed@mininet-vm:~$

Now that we have all our docker instances up and running, we simply need to set them up with ONOS. To do this we will use the standard ONOS toolset, the same set of commands you would use to deploy ONOS on a VM or bare metal machine.

Setting up ONOS in spawned docker instances (also known as docking the docker)

First, let's start by making sure our environment is set up correctly.

distributed@mininet-vm:~$ cell docker
ONOS_CELL=docker
OCI=172.17.0.2
OC1=172.17.0.2
OC2=172.17.0.3
OC3=172.17.0.4
OCN=localhost
ONOS_FEATURES=webconsole,onos-api,onos-core,onos-cli,onos-rest,onos-gui,onos-openflow,onos-app-fwd,onos-app-proxyarp,onos-app-mobility
ONOS_USER=root
ONOS_NIC=172.17.0.*

The first thing we need to do is set up passwordless access to our instances. This will save you a ton of time, especially when developing and pushing your component frequently. ONOS provides a script that will push your local key to the instance:

distributed@mininet-vm:~$ onos-push-keys $OC1
root@172.17.0.5's password: onosrocks
distributed@mininet-vm:~$ onos-push-keys $OC2
root@172.17.0.5's password: onosrocks
distributed@mininet-vm:~$ onos-push-keys $OC3
root@172.17.0.5's password: onosrocks

The password for your instance is onosrocks. You will need to do this for each instance. Now we just need to package ONOS by running:

distributed@mininet-vm:~$ onos-package
-rw-rw-r-- 1 distributed distributed 41940395 Dec 11 13:20 /tmp/onos-1.0.0.distributed.tar.gz

This prepares an ONOS installation which can now be shipped to the remote instances:

distributed@mininet-vm:~$ onos-install $OC1
onos start/running, process 308
distributed@mininet-vm:~$ onos-install $OC2
onos start/running, process 302
distributed@mininet-vm:~$ onos-install $OC3
onos start/running, process 300
distributed@mininet-vm:~$

This has now installed ONOS on your docker instances.

Verifying that ONOS is deployed

Now that ONOS is installed, let's quickly run some tests to make sure everything is ok. Let's start by connecting to the ONOS CLI:

distributed@mininet-vm:~$ onos -w $OC1
Connection to 172.17.0.2 closed.
Logging in as karaf
Welcome to Open Network Operating System (ONOS)!
     ____  _  ______  ____
    / __ \/ |/ / __ \/ __/
   / /_/ /    / /_/ /\ \
   \____/_/|_/\____/___/

Hit '<tab>' for a list of available commands
and '[cmd] --help' for help on a specific command.
Hit '<ctrl-d>' or type 'system:shutdown' or 'logout' to shutdown ONOS.

onos>

You should drop into the CLI. Now, in another terminal window, let's start mininet.

distributed@mininet-vm:~$ ./startmn.sh
mininet>

Now let's see if we have switches that are connected to ONOS:

onos> devices
id=of:0000000100000001, available=true, role=MASTER, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:0000000100000002, available=true, role=STANDBY, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:0000000200000001, available=true, role=MASTER, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:0000000200000002, available=true, role=STANDBY, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:0000000300000001, available=true, role=MASTER, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:0000000300000002, available=true, role=STANDBY, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:0000010100000000, available=true, role=STANDBY, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:0000010200000000, available=true, role=STANDBY, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:0000020100000000, available=true, role=MASTER, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:0000020200000000, available=true, role=STANDBY, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:0000030100000000, available=true, role=STANDBY, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:0000030200000000, available=true, role=MASTER, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:1111000000000000, available=true, role=STANDBY, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10
id=of:2222000000000000, available=true, role=STANDBY, type=SWITCH, mfr=Nicira, Inc., hw=Open vSwitch, sw=2.1.3, serial=None, protocol=OF_10

Let's see if we can forward traffic:

mininet> pingall
*** Ping: testing ping reachability
h111 -> h112 h121 h122 h211 h212 h221 h222 h311 h312 h321 h322
h112 -> h111 h121 h122 h211 h212 h221 h222 h311 h312 h321 h322
h121 -> h111 h112 h122 h211 h212 h221 h222 h311 h312 h321 h322
h122 -> h111 h112 h121 h211 h212 h221 h222 h311 h312 h321 h322
h211 -> h111 h112 h121 h122 h212 h221 h222 h311 h312 h321 h322
h212 -> h111 h112 h121 h122 h211 h221 h222 h311 h312 h321 h322
h221 -> h111 h112 h121 h122 h211 h212 h222 h311 h312 h321 h322
h222 -> h111 h112 h121 h122 h211 h212 h221 h311 h312 h321 h322
h311 -> h111 h112 h121 h122 h211 h212 h221 h222 h312 h321 h322
h312 -> h111 h112 h121 h122 h211 h212 h221 h222 h311 h321 h322
h321 -> h111 h112 h121 h122 h211 h212 h221 h222 h311 h312 h322
h322 -> h111 h112 h121 h122 h211 h212 h221 h222 h311 h312 h321
*** Results: 0% dropped (132/132 received)

Finally let's see if we have switches connected to each instance of ONOS:

onos> masters
172.17.0.2: 5 devices
  of:0000000100000001
  of:0000000200000001
  of:0000000300000001
  of:0000020100000000
  of:0000030200000000
172.17.0.3: 2 devices
  of:0000000100000002
  of:0000000300000002
172.17.0.4: 7 devices
  of:0000000200000002
  of:0000010100000000
  of:0000010200000000
  of:0000020200000000
  of:0000030100000000
  of:1111000000000000
  of:2222000000000000

The number of switches per ONOS instance may be different for you, because mastership is simply obtained by the first controller that completes the handshake with the switch. If you would like to rebalance the switches across the ONOS instances, simply run:

onos> balance-masters

And now the output of the masters command should give you something similar to this:

onos> masters
172.17.0.2: 5 devices
  of:0000000100000001
  of:0000000200000001
  of:0000000300000001
  of:0000020100000000
  of:0000030200000000
172.17.0.3: 4 devices
  of:0000000100000002
  of:0000000300000002
  of:0000020200000000
  of:1111000000000000
172.17.0.4: 5 devices
  of:0000000200000002
  of:0000010100000000
  of:0000010200000000
  of:0000030100000000
  of:2222000000000000
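To give a feel for what rebalancing mastership means, here is a small stand-alone Java sketch. It is purely illustrative and is not the algorithm ONOS implements: the `MasterBalancer` class, its methods and the device ids are made up for this example. It repeatedly moves one device from the busiest instance to the idlest one until the per-instance device counts differ by at most one.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative only: a greedy rebalancer, NOT the algorithm ONOS implements.
final class MasterBalancer {

    // Moves one device at a time from the busiest instance to the idlest one
    // until the per-instance device counts differ by at most one.
    static void balance(Map<String, Deque<String>> masters) {
        while (true) {
            String busiest = null;
            String idlest = null;
            for (Map.Entry<String, Deque<String>> e : masters.entrySet()) {
                int size = e.getValue().size();
                if (busiest == null || size > masters.get(busiest).size()) {
                    busiest = e.getKey();
                }
                if (idlest == null || size < masters.get(idlest).size()) {
                    idlest = e.getKey();
                }
            }
            if (busiest == null
                    || masters.get(busiest).size() - masters.get(idlest).size() <= 1) {
                return; // already balanced (or no instances at all)
            }
            masters.get(idlest).addLast(masters.get(busiest).removeLast());
        }
    }

    // Builds an instance entry holding `count` made-up device ids.
    static Deque<String> devices(String prefix, int count) {
        Deque<String> ids = new ArrayDeque<>();
        for (int i = 0; i < count; i++) {
            ids.add(prefix + "-" + i);
        }
        return ids;
    }

    public static void main(String[] args) {
        Map<String, Deque<String>> masters = new LinkedHashMap<>();
        masters.put("172.17.0.2", devices("a", 5));
        masters.put("172.17.0.3", devices("b", 2));
        masters.put("172.17.0.4", devices("c", 7));
        balance(masters);
        masters.forEach((node, ids) ->
                System.out.println(node + ": " + ids.size() + " devices"));
    }
}
```

Starting from 5, 2 and 7 devices, this sketch ends with 5, 4 and 5 devices per instance. Which devices actually move in a real deployment is up to ONOS.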

At this point, your multi-instance ONOS deployment is functional. Let's move on to writing some code.

Building 'Bring Your Own Network'

We are now going to start building BYON. BYON is a service which allows you to spawn virtual networks in which each host is connected to every other host of that virtual network. Basically, each virtual network contains a full mesh of the hosts that make it up.
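To make the full mesh idea concrete, here is a small stand-alone Java sketch. It is not part of the BYON starter code: the `FullMesh` class and its `pairs` method are hypothetical names, and plain strings stand in for ONOS host ids. It lists every unordered pair of hosts in a virtual network, which is the set of host-to-host connections a full mesh needs.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper for illustration; not part of the BYON starter code.
final class FullMesh {

    // Returns every unordered pair of hosts. Each pair stands for one
    // connection in the virtual network. Assumes the host names are distinct.
    static List<String[]> pairs(List<String> hosts) {
        List<String[]> result = new ArrayList<>();
        for (int i = 0; i < hosts.size(); i++) {
            for (int j = i + 1; j < hosts.size(); j++) {
                result.add(new String[] {hosts.get(i), hosts.get(j)});
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> hosts = Arrays.asList("h1", "h2", "h3", "h4");
        for (String[] pair : pairs(hosts)) {
            System.out.println(pair[0] + " <-> " + pair[1]);
        }
        System.out.println(pairs(hosts).size() + " connections");
    }
}
```

For n hosts this yields n(n-1)/2 pairs, so a four-host virtual network needs six connections.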

Part 1: Creating an application

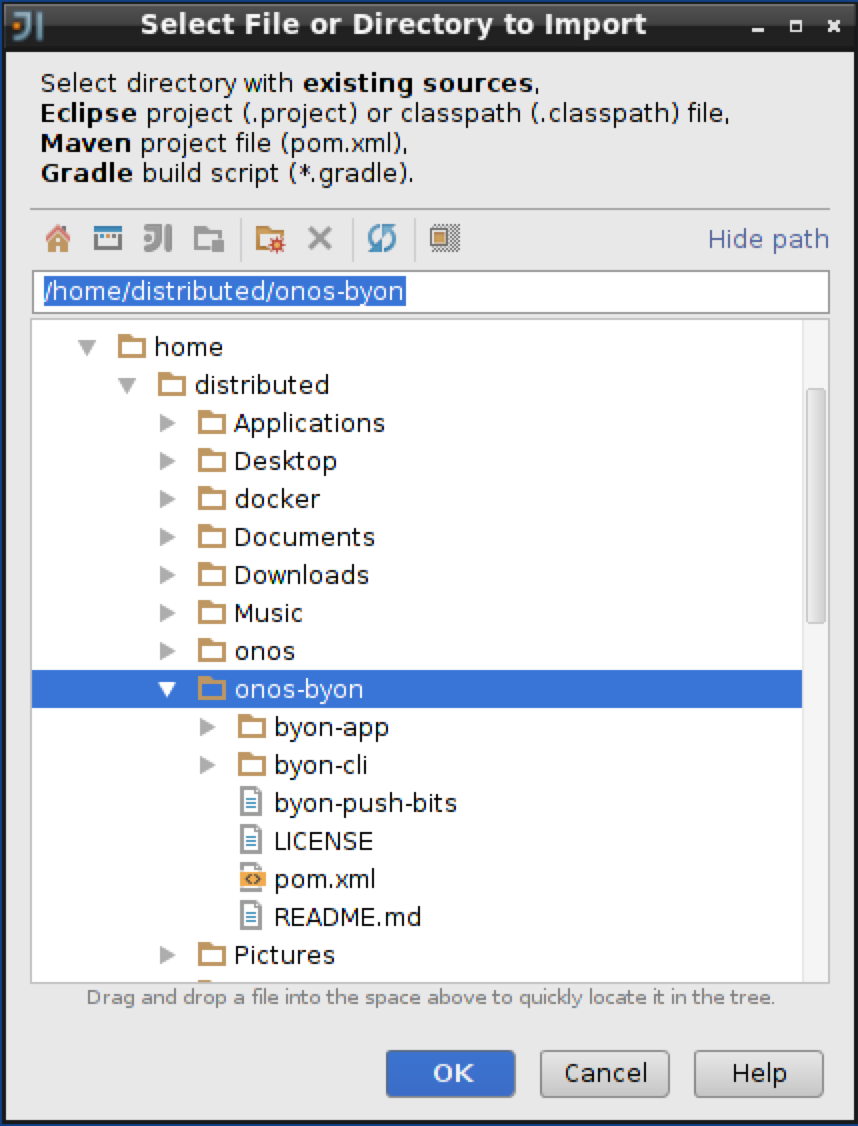

We have downloaded some starter code in the ~/byon directory. It contains a root pom.xml file for the project, as well as an initial implementation of the CLI bundle. We can start by importing the entire project into IntelliJ.

First, start IntelliJ by double-clicking the IntelliJ icon on your desktop. When you are prompted with the following window, select "Import Project" and import the onos-byon project.

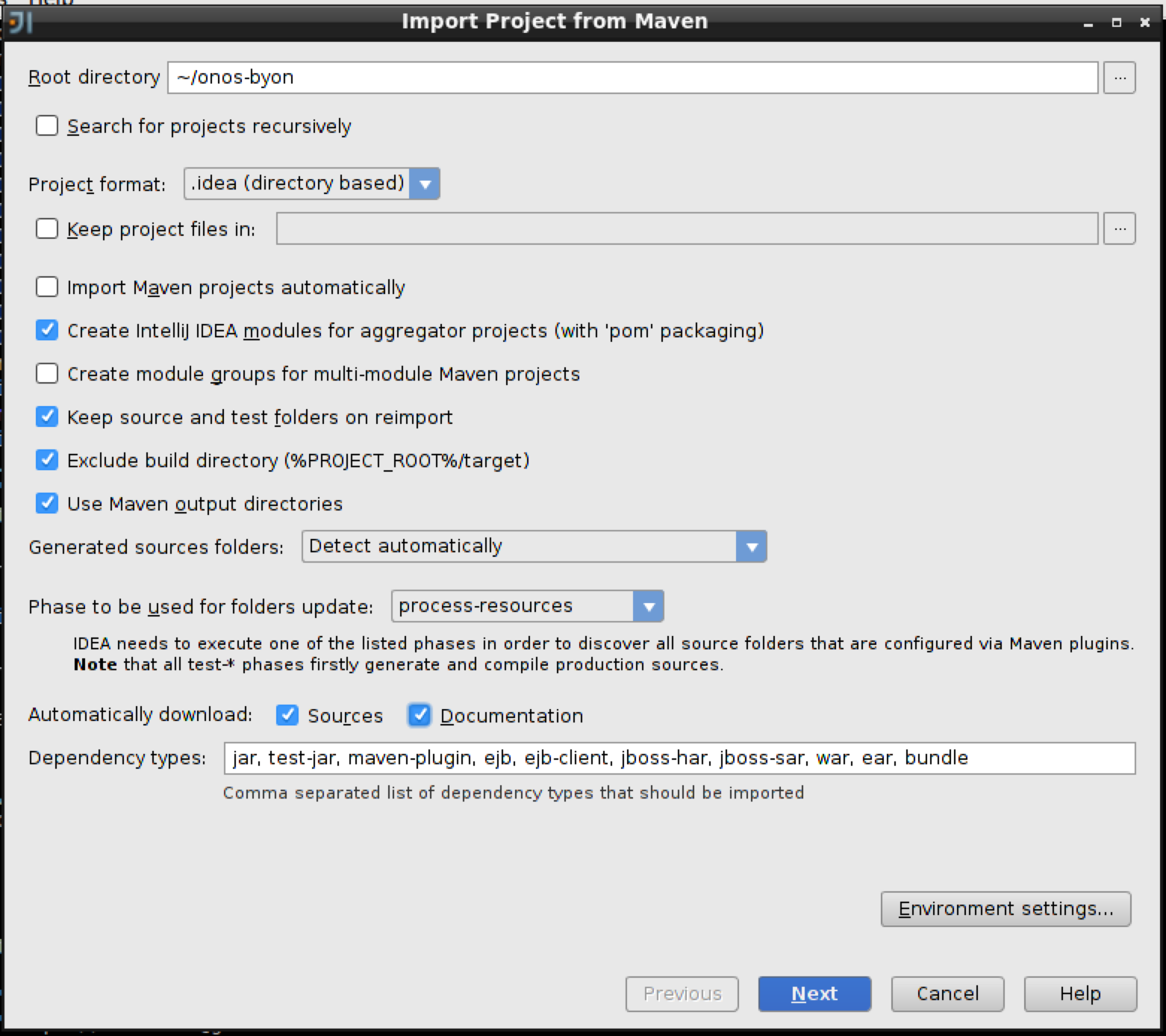

Import the project from external model, and select "Maven".

And now make sure you check "Sources" and "Documentation" in the Automatically download section:

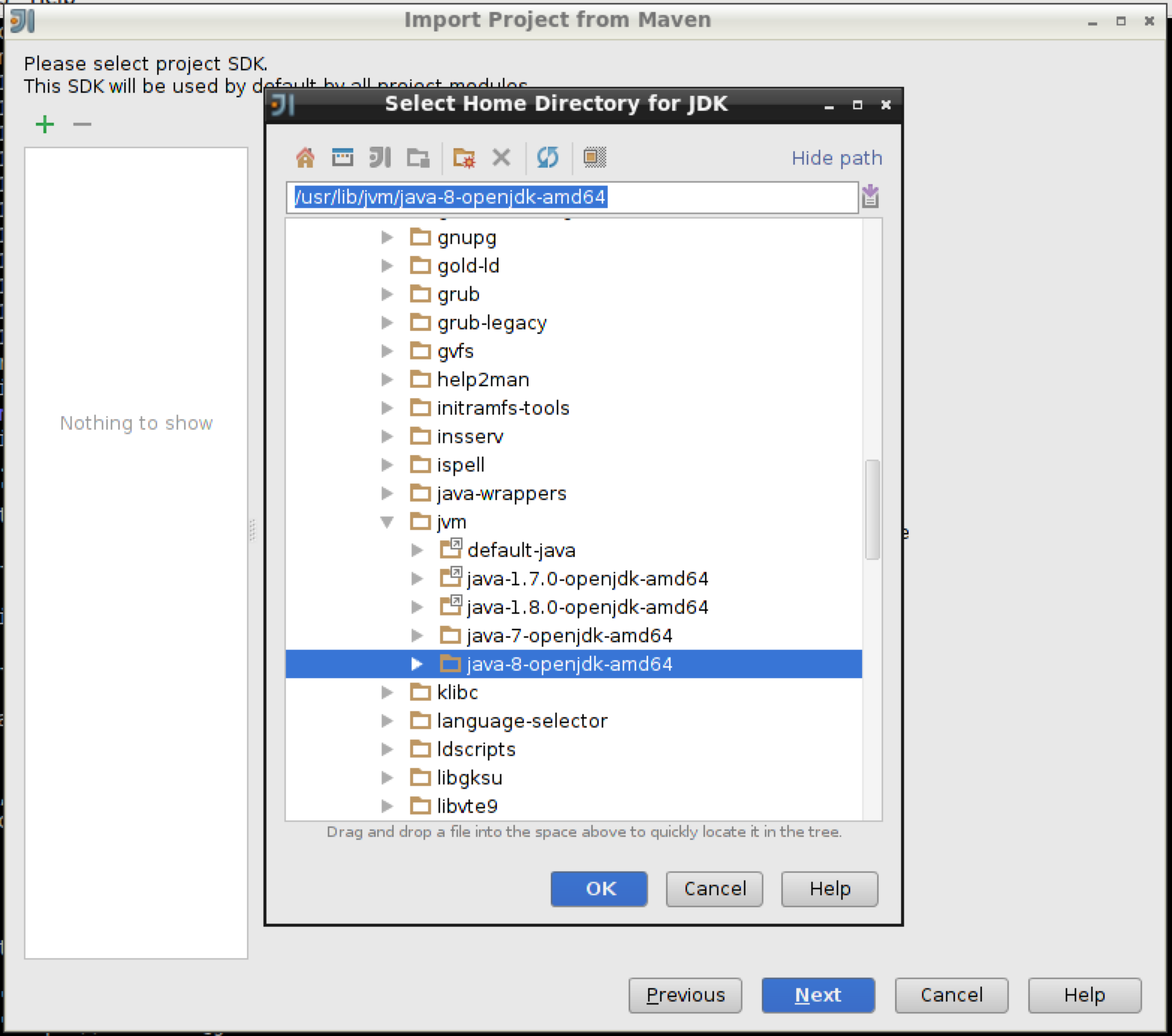

Click 'Next', and click 'Next' again on the following window. Now, make sure you pick Java 8 in the next window by first clicking on the green '+' sign and selecting 'java-8-openjdk-amd64', then click 'OK' followed by 'Next'.

Finally click on 'Finish'.

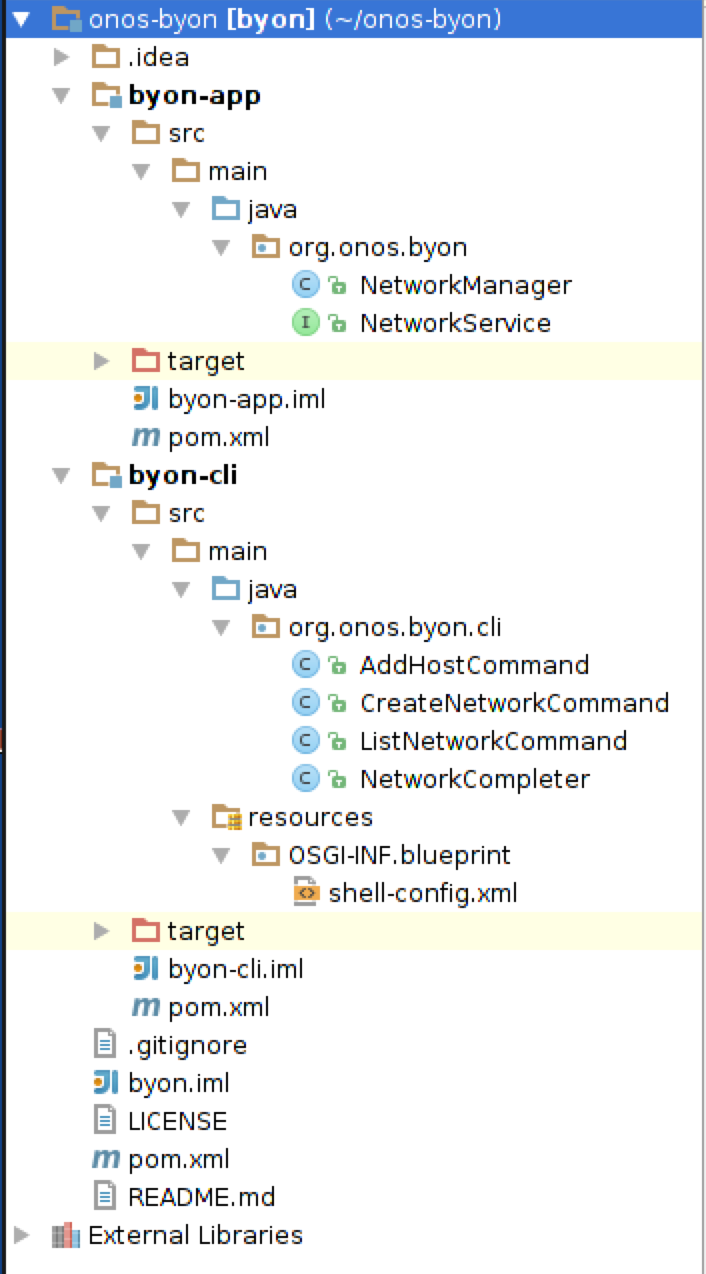

You should see two top-level packages on the left sidebar: byon-app and byon-cli as you can see below. Expand the byon-app application, and find NetworkService.java.

This file contains the interface for the NetworkService that we will be implementing. Next, open NetworkManager.java. This file is a component that doesn't export any services. Start by making NetworkManager implement NetworkService.

...

public class NetworkManager implements NetworkService {

...

We will need to implement all of the methods contained in NetworkService. For now, we can leave most of them empty. However, to be able to test the CLI, you should have getNetworks() return Sets.newHashSet("test") and getHosts() return Collections.emptySet():

@Override
public Set<String> getNetworks() {
    return Sets.newHashSet("test");
}

and

@Override
public Set<HostId> getHosts(String network) {
    return Collections.emptySet();
}

Also remember to add the @Service annotation to NetworkManager so that the component exports the NetworkService.

Now that we have some code implemented, let's try to run it. First, though, we must build and push the bundles we just coded. So let's start by building the code:

distributed@mininet-vm:~/onos-byon$ mci
[INFO] Scanning for projects...
...
[INFO] byon-app .......................................... SUCCESS [4.259s]
[INFO] byon-cli .......................................... SUCCESS [1.643s]
[INFO] byon .............................................. SUCCESS [0.104s]
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 6.719s
[INFO] Finished at: Fri Dec 12 14:28:16 PST 2014
[INFO] Final Memory: 30M/303M
[INFO] ------------------------------------------------------------------------
distributed@mininet-vm:~/onos-byon$

mci is an alias for mvn clean install. Now that your project has built successfully, let's push it up to the docker instances we launched earlier.

distributed@mininet-vm:~/onos-byon$ ./byon-push-bits
Pushing byon to 172.17.0.2
Pushing byon to 172.17.0.3
Pushing byon to 172.17.0.4

The byon-push-bits command takes the built bundles from your local maven repository and pushes them to the ONOS docker instances. The command also loads and starts the bundles, so every time you update your code you simply need to run byon-push-bits and the new bundles will be loaded and started in the remote ONOS instances.

Let's check that everything works by heading into ONOS and running a couple commands:

distributed@mininet-vm:~/onos-byon$ onos -w
Connection to 172.17.0.2 closed.
Logging in as karaf
Welcome to Open Network Operating System (ONOS)!
     ____  _  ______  ____
    / __ \/ |/ / __ \/ __/
   / /_/ /    / /_/ /\ \
   \____/_/|_/\____/___/

Hit '<tab>' for a list of available commands
and '[cmd] --help' for help on a specific command.
Hit '<ctrl-d>' or type 'system:shutdown' or 'logout' to shutdown ONOS.

onos> list
...
159 | Active | 80 | 1.0.0.SNAPSHOT | byon-app
160 | Active | 80 | 1.0.0.SNAPSHOT | byon-cli
onos> list-networks
test

So here we can see that ONOS has loaded the byon application, and if we run the list-networks command we see the one fake network that we hard coded in this section.

Part 2: Make it so, Number one

In this part we are going to implement a trivial store for our network service as well as learn how to push intents. The store will be used to store the service's network state (duh!) while the intent framework will allow us to simply connect together every host in the virtual network we create.

In order to use the Intent Framework, the caller must supply an Application ID. Application IDs allow ONOS to identify who is consuming which resources as well as to track applications.

First, we will need a reference to the CoreService, as it is the service that provides Application IDs. To do this, add the following code to the NetworkManager class.

@Reference(cardinality = ReferenceCardinality.MANDATORY_UNARY)
protected CoreService coreService;

This now gives you a reference to the CoreService at runtime. So let's pick up an application Id.

private ApplicationId appId;

// in activate method
appId = coreService.registerApplication("org.onos.byon");

Make sure to store the Application Id in a class field.

The NetworkManager is going to use the store (that we are going to build) to store information, so we need a reference to a NetworkStore:

@Reference(cardinality = ReferenceCardinality.MANDATORY_UNARY)
protected NetworkStore store;

Notice that at this point IntelliJ is not happy because the NetworkStore class does not exist. Well, let's create it!

public interface NetworkStore {

    /**
     * Create a named network.
     *
     * @param network network name
     */
    void putNetwork(String network);

    /**
     * Removes a named network.
     *
     * @param network network name
     */
    void removeNetwork(String network);

    /**
     * Returns a set of network names.
     *
     * @return a set of network names
     */
    Set<String> getNetworks();

    /**
     * Adds a host to the given network.
     *
     * @param network network name
     * @param hostId host id
     * @return updated set of hosts in the network (or an empty set if the host
     * has already been added to the network)
     */
    Set<HostId> addHost(String network, HostId hostId);

    /**
     * Removes a host from the given network.
     *
     * @param network network name
     * @param hostId host id
     */
    void removeHost(String network, HostId hostId);

    /**
     * Returns all the hosts in a network.
     *
     * @param network network name
     * @return set of host ids
     */
    Set<HostId> getHosts(String network);

    /**
     * Adds a set of intents to a network
     *
     * @param network network name
     * @param intents set of intents
     */
    void addIntents(String network, Set<Intent> intents);

    /**
     * Returns a set of intents given a network and a host.
     *
     * @param network network name
     * @param hostId host id
     * @return set of intents
     */
    Set<Intent> removeIntents(String network, HostId hostId);

    /**
     * Returns a set of intents given a network.
     *
     * @param network network name
     * @return set of intents
     */
    Set<Intent> removeIntents(String network);

}

Alright so now you have an interface for the NetworkStore, that makes IntelliJ happy. But someone should implement that interface right? Let's create a new class which implements the NetworkStore interface.

@Component(immediate = true, enabled = true)
@Service
public class SimpleNetworkStore
        implements NetworkStore {

    private static Logger log = LoggerFactory.getLogger(SimpleNetworkStore.class);

    private final Map<String, Set<HostId>> networks = Maps.newHashMap();
    private final Map<String, Set<Intent>> intentsPerNet = Maps.newHashMap();

    @Activate
    protected void activate() {
        log.info("Started");
    }

    @Deactivate
    protected void deactivate() {
        log.info("Stopped");
    }

}

Now as an exercise you must implement the methods of SimpleNetworkStore. Don't hesitate to ask questions here!
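If you would like a starting point, the following stand-alone sketch shows one possible shape for the host-related methods. It is not the reference solution: the class name `SketchNetworkStore` is hypothetical, plain strings stand in for HostId so the code compiles without ONOS, the intent methods are left out, and it makes no attempt at thread safety. Returning an empty set when the network does not exist is an assumption of this sketch; the interface only specifies the case where the host was already added.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Stand-alone sketch: String replaces ONOS's HostId so it compiles without ONOS.
final class SketchNetworkStore {

    private final Map<String, Set<String>> networks = new HashMap<>();

    void putNetwork(String network) {
        // Keep the existing host set if the network was already created.
        networks.putIfAbsent(network, new HashSet<>());
    }

    void removeNetwork(String network) {
        networks.remove(network);
    }

    Set<String> getNetworks() {
        // Return a copy so callers cannot modify the store's internal state.
        return new HashSet<>(networks.keySet());
    }

    // Returns the updated host set, or an empty set if the host was already
    // present (or, as an assumption of this sketch, if the network is unknown).
    Set<String> addHost(String network, String hostId) {
        Set<String> hosts = networks.get(network);
        if (hosts == null || !hosts.add(hostId)) {
            return Collections.<String>emptySet();
        }
        return new HashSet<>(hosts);
    }

    void removeHost(String network, String hostId) {
        Set<String> hosts = networks.get(network);
        if (hosts != null) {
            hosts.remove(hostId);
        }
    }

    Set<String> getHosts(String network) {
        Set<String> hosts = networks.get(network);
        if (hosts == null) {
            return Collections.<String>emptySet();
        }
        return new HashSet<>(hosts);
    }
}
```

The real SimpleNetworkStore would use HostId instead of String and would also need to keep the intentsPerNet map up to date.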

Add some Intents

Now that we have a simple store implementation, let's have byon program the network when hosts are added. For this we are going to need the intent framework, so let's grab a reference to it in the network manager.

@Reference(cardinality = ReferenceCardinality.MANDATORY_UNARY)
protected IntentService intentService;

And we will need the following code to implement the mesh of the hosts in each virtual network.

private Set<Intent> addToMesh(HostId src, Set<HostId> existing) {
    if (existing.isEmpty()) {
        return Collections.emptySet();
    }
    IntentOperations.Builder builder = IntentOperations.builder(appId);
    existing.forEach(dst -> {
        if (!src.equals(dst)) {
            builder.addSubmitOperation(new HostToHostIntent(appId, src, dst));
        }
    });
    IntentOperations ops = builder.build();
    intentService.execute(ops);
    return ops.operations().stream().map(IntentOperation::intent)
            .collect(Collectors.toSet());
}

private void removeFromMesh(Set<Intent> intents) {
    IntentOperations.Builder builder = IntentOperations.builder(appId);
    intents.forEach(intent -> builder.addWithdrawOperation(intent.id()));
    intentService.execute(builder.build());
}
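The bookkeeping implied by addToMesh and removeFromMesh can be tried out without ONOS using the stand-alone simulation below. It is only a sketch under stated assumptions: `MeshSimulation` is a hypothetical class, strings stand in for HostId, and a "src<->dst" label stands in for a HostToHostIntent. Adding a host creates one label per host already in the network, and removing a host withdraws every label that mentions it.

```java
import java.util.LinkedHashSet;
import java.util.Set;

// Stand-alone simulation; nothing here talks to ONOS.
final class MeshSimulation {

    private final Set<String> hosts = new LinkedHashSet<>();
    private final Set<String> intents = new LinkedHashSet<>();

    // Mirrors addToMesh: one new intent label per host already in the network.
    Set<String> addHost(String src) {
        Set<String> added = new LinkedHashSet<>();
        if (hosts.contains(src)) {
            return added; // already a member, nothing to do
        }
        for (String dst : hosts) {
            added.add(src + "<->" + dst);
        }
        hosts.add(src);
        intents.addAll(added);
        return added;
    }

    // Mirrors the removal path: withdraw every intent label that touches the host.
    Set<String> removeHost(String host) {
        Set<String> withdrawn = new LinkedHashSet<>();
        for (String intent : intents) {
            String[] ends = intent.split("<->");
            if (ends[0].equals(host) || ends[1].equals(host)) {
                withdrawn.add(intent);
            }
        }
        intents.removeAll(withdrawn);
        hosts.remove(host);
        return withdrawn;
    }

    int intentCount() {
        return intents.size();
    }

    public static void main(String[] args) {
        MeshSimulation net = new MeshSimulation();
        System.out.println(net.addHost("h1"));    // first host: no intents yet
        System.out.println(net.addHost("h2"));    // one intent, to h1
        System.out.println(net.addHost("h3"));    // two intents, to h1 and h2
        System.out.println(net.removeHost("h1")); // withdraws both intents touching h1
        System.out.println(net.intentCount() + " intent(s) left");
    }
}
```

In the real NetworkManager, the sets returned here would be the ones passed to the store's addIntents method and to removeFromMesh.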

Verify that everything works

So make sure you compile byon again with mci and run byon-push-bits again to get your latest bundles loaded into the ONOS docker instances.

Now, at an ONOS shell, play around with byon. You should be able to forward traffic in mininet.

onos> list-networks
onos> create-network test
Created network test
onos> add-host
add-host add-host-intent
onos> add-host test 00:00:00:00:00:01/-1
Added host 00:00:00:00:00:01/-1 to test
onos> add-host
add-host add-host-intent
onos> add-host test 00:00:00:00:00:02/-1
Added host 00:00:00:00:00:02/-1 to test
onos> list-networks
test
    00:00:00:00:00:01/-1
    00:00:00:00:00:02/-1
onos> intents
id=0x0, state=INSTALLED, type=HostToHostIntent, appId=org.onos.byon
    constraints=[LinkTypeConstraint{inclusive=false, types=[OPTICAL]}]

Now check in mininet that you can actually communicate between the two hosts that you added to your virtual network.

mininet> h1 ping h2
PING 10.0.0.2 (10.0.0.2) 56(84) bytes of data.
64 bytes from 10.0.0.2: icmp_seq=1 ttl=64 time=21.4 ms
64 bytes from 10.0.0.2: icmp_seq=2 ttl=64 time=0.716 ms
64 bytes from 10.0.0.2: icmp_seq=3 ttl=64 time=0.073 ms

Part 3: Flesh Out the CLI

Ok, so the CLI allows you to add networks and hosts but not remove them. In this part you will learn how to create CLI commands in ONOS. Start by creating two files in the byon-cli package: "RemoveHostCommand.java" and "RemoveNetworkCommand.java". These CLI commands are simple and very similar to the add CLI commands, so do this now as an exercise. When you have written these commands, you will need to add the following XML to the 'shell-config.xml' under resources.

<command>
    <action class="org.onos.byon.cli.RemoveHostCommand"/>
    <completers>
        <ref component-id="networkCompleter"/>
        <ref component-id="hostIdCompleter"/>
        <null/>
    </completers>
</command>
<command>
    <action class="org.onos.byon.cli.RemoveNetworkCommand"/>
    <completers>
        <ref component-id="networkCompleter"/>
        <null/>
    </completers>
</command>