This experiment measures how ONOS, as a cluster of various sizes, reacts to a topology event. The topology events tested are: 1) a switch connecting to or disconnecting from an ONOS node; 2) a link going up or down in an existing topology. Because ONOS has a distributed architecture, a multi-node cluster may incur additional delay in propagating a topology event compared with a standalone node. While the latency in standalone mode should be kept low, it is also desirable to reduce any additional delay that a clustered ONOS incurs from the East-West communication of events among its nodes.

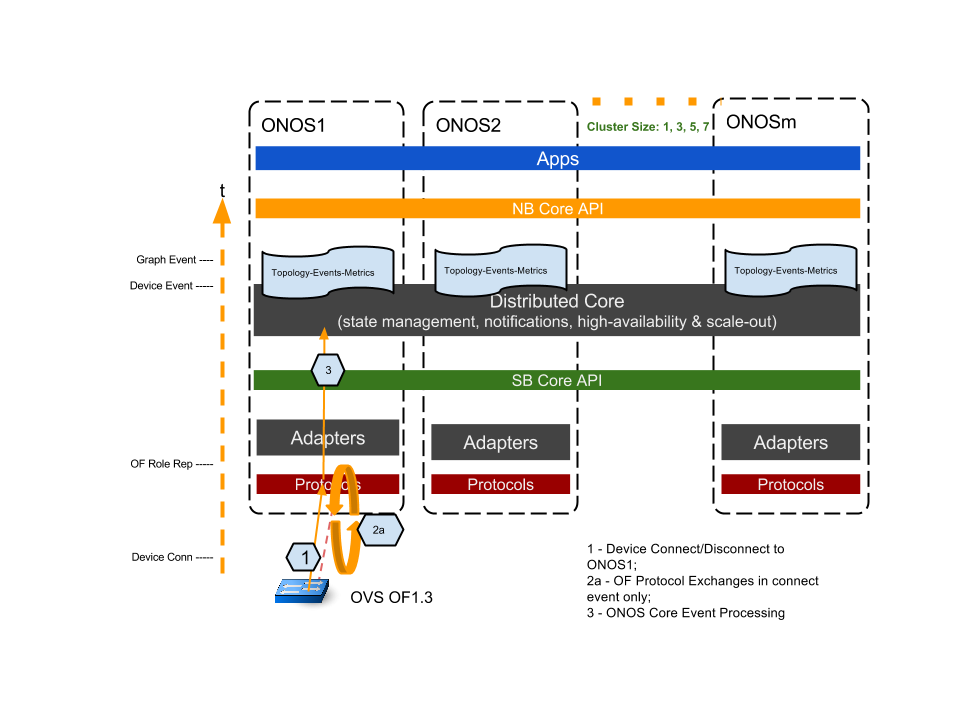

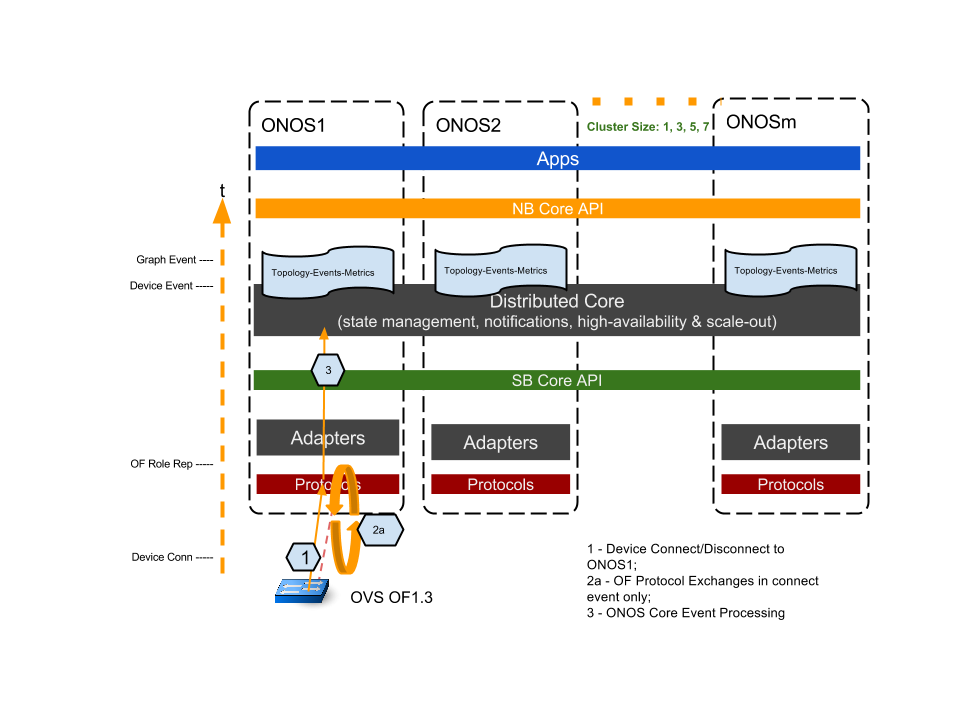

The following diagram illustrates the test setup. A switch connect event is generated from a “floating” (i.e., no data path ports or links) OVS switch (OF1.3) by issuing the “set-controller” command, which makes the OVS bridge initiate a connection to ONOS1. We capture “on the wire” timestamps on the OF control network with the tshark tool, as well as ONOS Device and Graph timestamps recorded in ONOS by the “topology-event-metrics” app. By collating these timestamps, we build an "end-to-end" timing profile of each event, from the initial trigger to when ONOS registers the event in its topology.
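The connect and disconnect triggers can be scripted around the standard `ovs-vsctl set-controller` and `ovs-vsctl del-controller` commands. The sketch below is a minimal illustration, assuming a bridge named `br0`, ONOS1 at the placeholder address `10.0.0.1`, and OpenFlow port 6653; these values and the helper names are hypothetical and should be adapted to the actual test bed. By default it only prints the commands (dry run).

```python
import shlex
import subprocess
import time


def set_controller_cmd(bridge: str, controller_ip: str, port: int = 6653) -> list[str]:
    """Build the ovs-vsctl command that points `bridge` at an ONOS node.

    The default port 6653 is an assumption; use whatever port ONOS listens on.
    """
    return ["ovs-vsctl", "set-controller", bridge, f"tcp:{controller_ip}:{port}"]


def del_controller_cmd(bridge: str) -> list[str]:
    """Build the ovs-vsctl command that disconnects `bridge` from its controller."""
    return ["ovs-vsctl", "del-controller", bridge]


def trigger(cmd: list[str], dry_run: bool = True) -> float:
    """Record a local wall-clock reference time and (optionally) run the command.

    The t0 used in the analysis comes from the tshark capture; this local
    timestamp is only a coarse reference for matching iterations.
    """
    t0 = time.time()
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)
    return t0


if __name__ == "__main__":
    trigger(set_controller_cmd("br0", "10.0.0.1"))  # switch connect trigger
    trigger(del_controller_cmd("br0"))              # switch disconnect trigger
```

Keeping command construction separate from execution makes it easy to log exactly what was issued in each iteration.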

The key timing captures are the following:

Device initiates the connection, t0 (TCP SYN/ACK);

OpenFlow protocol exchanges:

t0 -> OVS Features Reply;

OVS Features Reply -> ONOS Role Request (ONOS processes the mastership election for the device here);

ONOS Role Request -> OVS Role Reply (completes the initial OF protocol exchange);

ONOS core processing of the event:

A Device Event is triggered once the OF protocol exchange completes;

A Topology (Graph) Event is triggered by the local node's Device Event.
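The phases listed above can be computed from the collated timestamps by subtracting consecutive capture points. A minimal sketch, assuming all timestamps are in milliseconds on a common clock; the class, field, and function names are hypothetical and are not part of the topology-event-metrics app.

```python
from dataclasses import dataclass


@dataclass
class ConnectTimestamps:
    """Timestamps (ms) for one switch-connect iteration on one ONOS node.

    Wire timestamps would come from tshark; Device/Graph timestamps from
    the topology-event-metrics app. Field names are illustrative only.
    """
    tcp_syn_ack: float     # t0: device initiates the connection
    features_reply: float  # OVS Features Reply seen on the wire
    role_request: float    # ONOS Role Request (after mastership election)
    role_reply: float      # OVS Role Reply, end of the OF handshake
    device_event: float    # ONOS Device Event
    graph_event: float     # ONOS Topology (Graph) Event


def connect_breakdown(ts: ConnectTimestamps) -> dict[str, float]:
    """Split the end-to-end connect latency into consecutive phases."""
    return {
        "t0->features_reply": ts.features_reply - ts.tcp_syn_ack,
        "features_reply->role_request": ts.role_request - ts.features_reply,
        "role_request->role_reply": ts.role_reply - ts.role_request,
        "role_reply->device_event": ts.device_event - ts.role_reply,
        "device_event->graph_event": ts.graph_event - ts.device_event,
        "end_to_end": ts.graph_event - ts.tcp_syn_ack,
    }
```

Because the phases are consecutive differences, they sum to `end_to_end` by construction, which gives a quick sanity check on the collated data.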

Likewise, to test a switch disconnect event, we use the OVS command "del-controller" to disconnect the above switch from its ONOS node. The timestamps captured are the following:

The switch disconnect end-to-end latency is (t1 - t0).

As we scale the ONOS cluster size, we connect and disconnect the switch only on ONOS1 and record the event timestamps on all nodes. The overall latency of the cluster is determined by the last node in the cluster to report the Graph Event. Our test script runs multiple iterations (e.g., 20) of each test to gather statistical results.
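The cluster-level rule (the last node to report the Graph Event defines the latency) and the aggregation over iterations can be sketched as follows. This assumes the trigger time t0 and every node's Graph Event timestamp share a common time base (for example, NTP-synchronized hosts); the function names and input layout are hypothetical. The same computation applies to both connect and disconnect iterations.

```python
import statistics


def cluster_latency(t0: float, graph_event_by_node: dict[str, float]) -> float:
    """Latency of one iteration: time until the LAST node reports the Graph Event."""
    return max(graph_event_by_node.values()) - t0


def summarize(iterations: list[tuple[float, dict[str, float]]]) -> dict[str, float]:
    """Aggregate per-iteration cluster latencies (e.g. over 20 iterations).

    Each iteration is (t0, {node_name: graph_event_timestamp}).
    """
    lat = [cluster_latency(t0, nodes) for t0, nodes in iterations]
    return {
        "mean": statistics.mean(lat),
        "stdev": statistics.stdev(lat) if len(lat) > 1 else 0.0,
        "min": min(lat),
        "max": max(lat),
    }
```

Taking the maximum over nodes, rather than ONOS1 alone, is what captures the East-West propagation cost as the cluster grows.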

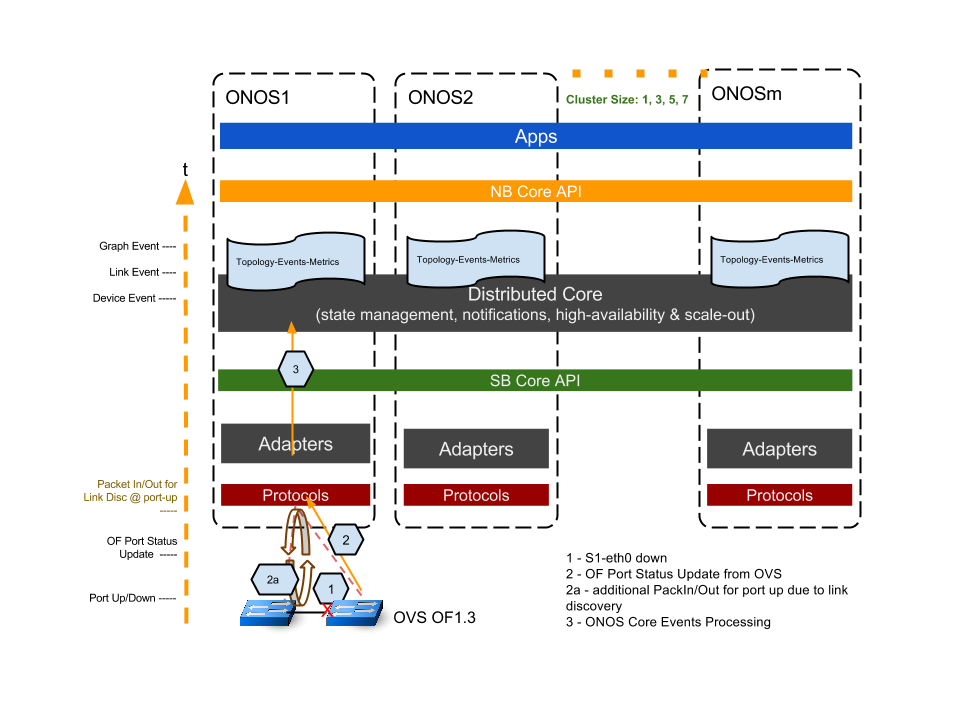

To test link up/down event latency, we use a methodology similar to the switch-connect test, except that we use two OVS switches to create the link (we use Mininet to create a simple linear two-switch topology). Both switches' masterships belong to ONOS1 (see the diagram). After the switch-controller connections are initially established, we bring one of the switches' interfaces up or down to generate the port up or down event that triggers the test.
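One way to bring a switch interface up or down on the Mininet host is the standard `ip link set dev <iface> up|down` command. The sketch below assumes the interface name `s1-eth2` (Mininet's usual naming for the port on `s1` facing `s2` in a two-switch linear topology); treat the name and helpers as hypothetical. It prints the command by default; running it for real requires root.

```python
import shlex
import subprocess


def set_port_state_cmd(iface: str, up: bool) -> list[str]:
    """Build an `ip link` command that brings a switch interface up or down."""
    return ["ip", "link", "set", "dev", iface, "up" if up else "down"]


def toggle_port(iface: str, up: bool, dry_run: bool = True) -> None:
    """Print (dry run) or execute the port state change that triggers the test."""
    cmd = set_port_state_cmd(iface, up)
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)  # needs root on the Mininet host


if __name__ == "__main__":
    toggle_port("s1-eth2", up=False)  # link-down trigger
    toggle_port("s1-eth2", up=True)   # link-up trigger
```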

The key timestamps recorded are described below:

Switch port brought up/down, t0;

OF PortStatus update message sent from OVS to ONOS1;

2a. In the case of port up, ONOS reacts with link discovery by sending link discovery messages (PacketOut) to each OVS switch and receiving OpenFlow PacketIns from the other OVS switch;

ONOS core processing of the events:

A Device Event is generated by the OF PortStatus message (ONOS processing);

On link down, a Link Event is generated locally on each node, triggered by the Device Event; on link up, a Link Event is generated upon completion of the link discovery PacketOut/PacketIn exchange (mostly OF messaging time plus ONOS processing);

A Graph Event is generated locally on each node (ONOS processing).
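The link test breakdown follows the same pattern, with one difference: on port up, the link discovery round trip sits inside the Device Event to Link Event phase. A minimal sketch, assuming millisecond timestamps on a common clock; the function and key names are hypothetical. The discovery timing is reported as a separate, overlapping diagnostic rather than a summed phase, so the main phases still add up to the end-to-end latency for both up and down events.

```python
from typing import Optional


def link_breakdown(
    t0: float,
    port_status: float,
    device_event: float,
    link_event: float,
    graph_event: float,
    discovery_packet_in: Optional[float] = None,
) -> dict[str, float]:
    """Phase timings (ms) for one link up/down iteration on one node.

    Pass `discovery_packet_in` (the discovery PacketIn from the peer switch)
    only for port-up iterations; port-down events have no discovery phase.
    """
    phases = {
        "t0->port_status": port_status - t0,
        "port_status->device_event": device_event - port_status,
        "device_event->link_event": link_event - device_event,
        "link_event->graph_event": graph_event - link_event,
        "end_to_end": graph_event - t0,
    }
    if discovery_packet_in is not None:
        # Overlaps the phases above, so it is reported separately, not summed.
        phases["port_status->discovery_packet_in"] = discovery_packet_in - port_status
    return phases
```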

As in the switch-connect test, we take the time at which the last node in the cluster registers the Graph Event as the latency of the cluster.

Switch-add Event:


Link Up/Down Event:


Switch Connect/Disconnect Event:

On connecting a switch to ONOS, the notable key timing breakdowns are:

On a link down event, the time for ONOS to trigger the Device Event increases slightly as the cluster size scales.

In conclusion, the overall end-to-end timing of a switch connect event is dominated by the OVS response time to the Features Request during the OpenFlow message exchange. ONOS then spends approximately 9 ms processing the mastership election. Both switch connect and disconnect latencies increase when ONOS runs as a multi-node cluster, because the election must be communicated among the nodes.

Link Up/Down Events:

The first observation from this test is that a link-up event takes substantially longer than a link-down event. The timing breakdown shows that the port-up event at the OVS triggers extra ONOS activity, mainly for link discovery. This timing also increases more in a multi-node ONOS cluster than in standalone ONOS. Otherwise, ONOS core processing takes only low single-digit milliseconds to register the topology event in the Graph.

In most network operation scenarios, while low overall topology event latency is desirable, switch/link down events are more critical because applications treat them as adverse events. The faster ONOS detects them, the sooner applications can react. We show that ONOS in this release is capable of single-digit millisecond switch/link down detection time.