Architecture design

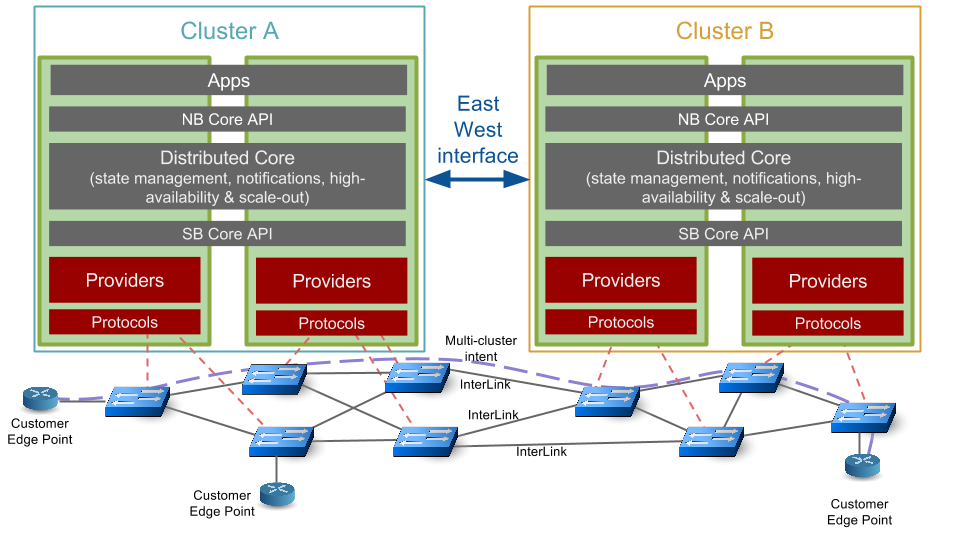

A cluster is a multi-instance ONOS deployment. Each ONOS instance, or node, shares the network state and topology with all other nodes in the same cluster. In a multi-domain scenario, we expect multiple ONOS clusters to exchange network information, much as standard multi-domain protocols do. The goal of this feature project is to enable multiple ONOS clusters to share information about their networks through an East-West interface. In addition, applications can exploit the resulting multi-cluster topology to configure routes that cross different clusters.

As shown in the following image, the topology components related to a multi-cluster scenario are:

- the links connecting two clusters, called interlinks (ILs);

- the customer ports at the edge (ONOS hosts?), known as customer edge points (CEPs);

- the routes crossing multiple clusters, called multi-cluster intents.

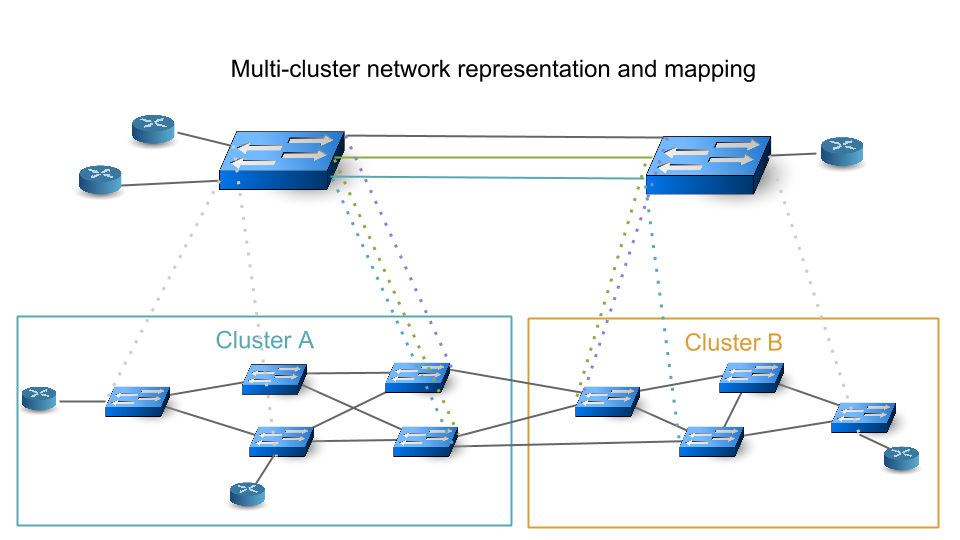

Each cluster provides an abstraction of its network (the local network it directly controls) to be shared with all other clusters. For this reason, we decided to create a new ONOS provider that communicates with other clusters and exposes a single big switch representing the local network. In particular, as depicted in the figure below, the customer ports at the edge are exposed as CEPs, and the links to other clusters as ILs. The provider also needs to keep the mapping between its network elements and the exposed ones, e.g., between a physical switch port and its CEP, or the path traversed by a multi-cluster intent. The distributed architecture and how the data are stored are discussed in Distributed operation for the Multi-Clusters Peering Provider and Interaction with the local store, respectively.
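A minimal sketch of the mapping the provider keeps, assuming each exposed port of the single big switch gets a sequential virtual port number and is tagged as either a CEP or an IL end. The names `BigSwitchMapping`, `PhysicalPort` and `PortKind` are hypothetical; a real provider would use ONOS types such as `ConnectPoint` instead of the plain strings used here.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical classification of an exposed port of the single big switch.
enum PortKind { CEP, INTERLINK }

// Hypothetical stand-in for a physical (device, port) pair; ONOS would use ConnectPoint.
record PhysicalPort(String deviceId, long portNumber) { }

// Bidirectional mapping between physical ports and the virtual ports of the big switch.
final class BigSwitchMapping {
    private final Map<PhysicalPort, Long> toVirtual = new HashMap<>();
    private final Map<Long, PhysicalPort> toPhysical = new HashMap<>();
    private final Map<Long, PortKind> kinds = new HashMap<>();
    private long nextVirtualPort = 1;

    // Exposes a physical port as a virtual port; exposing it again returns the existing number.
    long expose(PhysicalPort port, PortKind kind) {
        Long existing = toVirtual.get(port);
        if (existing != null) {
            return existing;
        }
        long virtualPort = nextVirtualPort++;
        toVirtual.put(port, virtualPort);
        toPhysical.put(virtualPort, port);
        kinds.put(virtualPort, kind);
        return virtualPort;
    }

    Optional<PhysicalPort> physicalOf(long virtualPort) {
        return Optional.ofNullable(toPhysical.get(virtualPort));
    }

    Optional<Long> virtualOf(PhysicalPort port) {
        return Optional.ofNullable(toVirtual.get(port));
    }

    Optional<PortKind> kindOf(long virtualPort) {
        return Optional.ofNullable(kinds.get(virtualPort));
    }
}
```

Keeping both directions lets the provider translate a multi-cluster intent expressed on virtual ports back to physical ports, and map events on physical ports onto the exposed topology.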

The single big switch needs to be enriched with internal metrics and a cross-connection matrix. Each port of the switch is mapped to a port of a physical network device, so we need a way to expose a metric describing how each port is connected to the others. We also need to account for pairs of ports that have no connection to each other. More details are discussed in Internal Switch Metric and cross connection matrix.

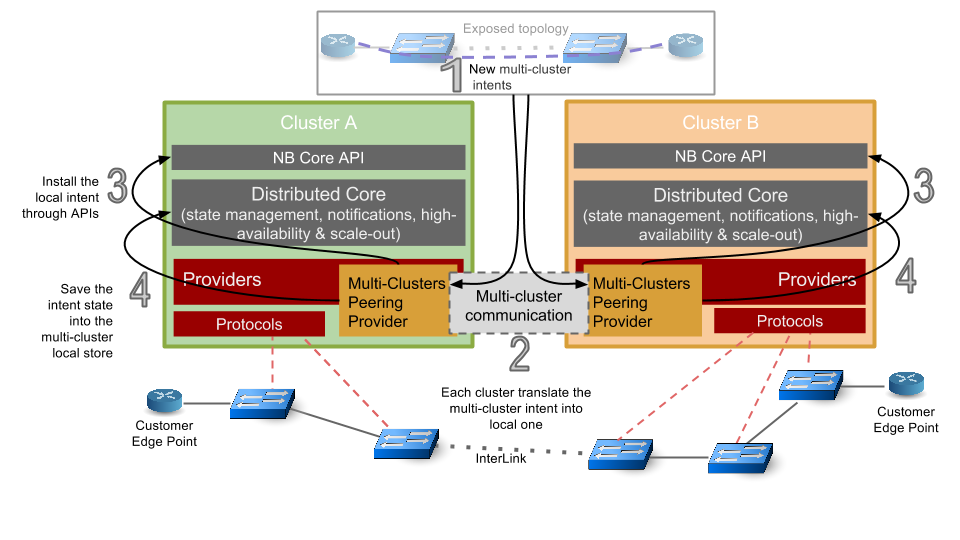

As mentioned above, the Multi-Clusters Peering Provider is able to manage multi-cluster intents. The idea is to provide policy-based traffic routing between clusters.

Workflow

Network topology

Intent

Run-time configuration

For security reasons, the multi-cluster domain must be explicitly configured to prevent unwanted connections from unknown clusters. For the same reason, the interlinks are configured manually by the administrator. The provider nevertheless detects when an interlink goes down for any reason, not only by monitoring the switches and ports but also by sending "External" LLDP probes.
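These configuration rules can be sketched as follows, assuming peer clusters are identified by a string ID, interlinks are identified by name, and an interlink is considered down when no valid "External" LLDP probe has arrived within a configurable timeout. `MultiClusterConfig`, `InterlinkMonitor` and the timeout policy are hypothetical; the design does not specify how probes are timed, and in practice this check would be combined with switch and port status events.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical administrator-provided configuration: allowed peers and manually configured ILs.
record MultiClusterConfig(Set<String> allowedPeerClusters, Map<String, String> interlinkToPeer) { }

// Tracks interlink liveness from received "External" LLDP probes.
final class InterlinkMonitor {
    private final MultiClusterConfig config;
    private final Duration timeout;
    private final Map<String, Instant> lastProbe = new HashMap<>();

    InterlinkMonitor(MultiClusterConfig config, Duration timeout) {
        this.config = config;
        this.timeout = timeout;
    }

    // Accepts a probe only if the IL was configured and the sender is its allowed peer cluster.
    boolean onProbe(String interlinkId, String senderCluster, Instant receivedAt) {
        String expectedPeer = config.interlinkToPeer().get(interlinkId);
        boolean accepted = expectedPeer != null
                && expectedPeer.equals(senderCluster)
                && config.allowedPeerClusters().contains(senderCluster);
        if (accepted) {
            lastProbe.put(interlinkId, receivedAt);
        }
        return accepted;
    }

    // An IL is considered up only if a valid probe arrived within the timeout.
    boolean isUp(String interlinkId, Instant now) {
        Instant last = lastProbe.get(interlinkId);
        return last != null && Duration.between(last, now).compareTo(timeout) <= 0;
    }
}
```

Probes from unknown clusters, or on links the administrator never configured, are simply ignored, so they can neither create an IL nor keep one alive.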

We also define the possibility of policy-based routing (e.g., a weight for each IL, or a preferred path for specific classes of traffic based on L2 and L3 fields...).
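A possible shape for such policies, assuming each policy matches traffic on a few L2/L3 fields and names a preferred IL, and that traffic matching no policy uses the available IL with the lowest weight. `TrafficClass`, `Policy` and `InterlinkSelector` are hypothetical names, fields are matched exactly for simplicity (no prefix matching), and the lowest-weight fallback is an assumed default rather than something the design specifies.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical traffic descriptor with a few L2/L3 fields; in a policy, null means "any".
record TrafficClass(Integer vlanId, String ipDst, Integer ipProto) { }

// Traffic matching `match` should use `preferredInterlink`.
record Policy(TrafficClass match, String preferredInterlink) {
    boolean matches(TrafficClass t) {
        return (match.vlanId() == null || match.vlanId().equals(t.vlanId()))
                && (match.ipDst() == null || match.ipDst().equals(t.ipDst()))
                && (match.ipProto() == null || match.ipProto().equals(t.ipProto()));
    }
}

final class InterlinkSelector {
    private final List<Policy> policies;          // evaluated in order, first match wins
    private final Map<String, Integer> weights;   // weight of each available IL (lower is preferred)

    InterlinkSelector(List<Policy> policies, Map<String, Integer> weights) {
        this.policies = policies;
        this.weights = weights;
    }

    Optional<String> select(TrafficClass traffic) {
        for (Policy p : policies) {
            // Use the preferred IL only if it is currently available.
            if (p.matches(traffic) && weights.containsKey(p.preferredInterlink())) {
                return Optional.of(p.preferredInterlink());
            }
        }
        // Fallback: the available IL with the lowest weight.
        return weights.entrySet().stream()
                .min(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey);
    }
}
```

For example, a policy matching IP protocol 17 (UDP) can pin that traffic to one IL while everything else follows the IL weights.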

Open issues

Communication between clusters

Communication among clusters is critical and also depends on ONOS (see the section Distributed multi-clusters communication system). The options considered are:

- a new ONOS store accessible by other clusters: this seems to be the best solution, but it is complex, because the ONOS stores would have to be extended to provide inter-cluster communication. Security must also be managed: for example, cluster1 and cluster3 are peering, and cluster3 and cluster4 are also connected. The problem is that cluster3 can see all the other clusters, whereas cluster1 and cluster4 should access only a portion of the multi-cluster topology.

- a Java messaging system (e.g., Hazelcast): communication is provided by an external system (e.g., a publish-subscribe mechanism), and each cluster saves the relevant information in its local store.

- an extension of the ONOS messaging system to permit inter-cluster communication: similar to the previous option, but using an extension of the ONOS messaging system instead of an external one.
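The security concern raised for the first option applies to any of these mechanisms. A minimal in-memory publish-subscribe sketch, standing in for an external system such as Hazelcast or an extended ONOS messaging system, can make it concrete. It assumes a cluster may subscribe only to the exposed topology of clusters it directly peers with; `TopologyBus` and this direct-peer visibility rule are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Consumer;

// In-memory stand-in for an inter-cluster publish-subscribe system.
final class TopologyBus {
    private final Map<String, Set<String>> peers = new HashMap<>();
    private final Map<String, List<Consumer<String>>> subscribers = new HashMap<>();

    // Peering is symmetric: both clusters become visible to each other.
    void addPeering(String a, String b) {
        peers.computeIfAbsent(a, k -> new HashSet<>()).add(b);
        peers.computeIfAbsent(b, k -> new HashSet<>()).add(a);
    }

    // A cluster may subscribe only to the topology of a directly peered cluster.
    boolean subscribe(String subscriber, String publisher, Consumer<String> handler) {
        if (!peers.getOrDefault(subscriber, Set.of()).contains(publisher)) {
            return false;
        }
        subscribers.computeIfAbsent(publisher, k -> new ArrayList<>()).add(handler);
        return true;
    }

    // Delivers an exposed-topology update to every authorized subscriber.
    void publish(String publisher, String exposedTopology) {
        subscribers.getOrDefault(publisher, List.of()).forEach(h -> h.accept(exposedTopology));
    }
}
```

With cluster1 peering with cluster3 and cluster3 peering with cluster4, cluster3 receives updates from both neighbors, while cluster1's request to subscribe to cluster4 is refused.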

Distributed operation for the Multi-Clusters Peering Provider (to be discussed)

In the multi-cluster scenario there are two types of distribution: one within the local cluster and the other between clusters.

At the local level, the Multi-Clusters Peering providers keep the mapping between the local network topology and the exposed one, and install the intents (or flows) needed by multi-cluster intents. Between clusters, each cluster informs the other clusters of its exposed topology and keeps them updated; it also processes multi-cluster intent requests.

In both cases, the orchestration could follow either a master-slave approach or a fully distributed one.

In the simpler master-slave case, a master provider is elected in each cluster using org.onosproject.cluster.LeadershipService. The master provider collects the local network topology from the ONOS store, creates the mapping and exposes the network topology to other clusters. It also retrieves the topologies exposed by other clusters from the distributed multi-cluster communication system. This provider handles the communication between clusters and manages all multi-cluster intent requests. The slave providers are active, but they neither expose the topology nor communicate with other clusters. If the ONOS instance hosting the master fails, a new master is elected.
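The master-slave behavior can be illustrated with a self-contained sketch that does not depend on ONOS. `MasterElection` is a hypothetical stand-in for org.onosproject.cluster.LeadershipService: here the master is simply the lowest node ID among the active instances, an assumption made for determinism that does not reflect how LeadershipService actually chooses a leader.

```java
import java.util.Optional;
import java.util.TreeSet;

// Hypothetical, deterministic stand-in for leader election within one ONOS cluster.
final class MasterElection {
    private final TreeSet<String> activeNodes = new TreeSet<>();

    void nodeUp(String nodeId) {
        activeNodes.add(nodeId);
    }

    // If the master's instance fails, the next call to master() yields a new master.
    void nodeDown(String nodeId) {
        activeNodes.remove(nodeId);
    }

    Optional<String> master() {
        return activeNodes.isEmpty() ? Optional.empty() : Optional.of(activeNodes.first());
    }

    // Only the master provider exposes the big switch and handles multi-cluster intents;
    // the other providers stay active but idle.
    boolean mayExposeTopology(String nodeId) {
        return master().map(nodeId::equals).orElse(false);
    }
}
```

Each provider would check `mayExposeTopology` for its own node before talking to other clusters, so failover reduces to re-evaluating that check after a node leaves.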

In the distributed scenario, each Multi-Clusters Peering provider takes care of the portion of the network directly controlled by the ONOS instance it belongs to. The provider exposes the single big switch (if no other provider in the same cluster has already done so) and keeps it updated (e.g., new CEPs, new ILs...). It also saves the mapping between the exposed topology and the local one (the one managed directly by that ONOS instance) in the cluster distributed store.
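A minimal sketch of the distributed variant, assuming a `ConcurrentMap` stands in for the cluster distributed store (ONOS's ConsistentMap also provides a `putIfAbsent` operation, but this sketch does not use ONOS). The first provider to arrive creates the big switch entry and later ones reuse it, while each provider records mappings only for the devices its own instance controls. `DistributedExposure` and the key layout are hypothetical.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical per-cluster shared state, standing in for the cluster distributed store.
final class DistributedExposure {
    private final ConcurrentMap<String, String> exposedSwitch = new ConcurrentHashMap<>();
    // Key: "<instanceId>/<physicalPort>", value: virtual port exposed to other clusters.
    private final ConcurrentMap<String, Long> portMapping = new ConcurrentHashMap<>();

    // Exposes the single big switch unless another provider in the cluster already did.
    // Returns true only for the provider that actually created it.
    boolean exposeIfAbsent(String clusterId, String bigSwitchId) {
        return exposedSwitch.putIfAbsent(clusterId, bigSwitchId) == null;
    }

    // Each provider records the mapping only for ports of devices its instance controls.
    void recordMapping(String instanceId, String physicalPort, long virtualPort) {
        portMapping.put(instanceId + "/" + physicalPort, virtualPort);
    }

    // Counts the mappings contributed by one instance.
    long mappingsOf(String instanceId) {
        return portMapping.keySet().stream()
                .filter(k -> k.startsWith(instanceId + "/"))
                .count();
    }
}
```

The atomic `putIfAbsent` is what makes "if not already done by other providers" safe when several instances start at the same time.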

How is load balancing done among the ONOS instances? Do service requests go only to the master instances? (TBD)

Interaction with the local store

The Multi-Clusters Peering Provider needs to store some information in the local store of each cluster (e.g., port mappings, multi-cluster intents...). We could create a new MultiClusterStore based on org.onosproject.store.Store.
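One possible shape for such a store, written without ONOS dependencies so that it runs on its own. In ONOS the interface would extend org.onosproject.store.Store and the implementation would typically be backed by distributed primitives; here the hypothetical `MultiClusterStore` interface and its in-memory implementation only show which data would be kept: the port mapping and, for each multi-cluster intent, the ILs it traverses.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.stream.Collectors;

// Hypothetical store API for the Multi-Clusters Peering Provider.
interface MultiClusterStore {
    void putPortMapping(String physicalPort, long virtualPort);
    Optional<Long> virtualPort(String physicalPort);
    void putIntentPath(String intentId, List<String> interlinks);
    Optional<List<String>> intentPath(String intentId);
    // Returns the intents that traverse a given IL, e.g. to reroute them when it fails.
    List<String> intentsUsing(String interlink);
}

// In-memory implementation, for illustration only.
final class InMemoryMultiClusterStore implements MultiClusterStore {
    private final Map<String, Long> ports = new ConcurrentHashMap<>();
    private final Map<String, List<String>> paths = new ConcurrentHashMap<>();

    @Override
    public void putPortMapping(String physicalPort, long virtualPort) {
        ports.put(physicalPort, virtualPort);
    }

    @Override
    public Optional<Long> virtualPort(String physicalPort) {
        return Optional.ofNullable(ports.get(physicalPort));
    }

    @Override
    public void putIntentPath(String intentId, List<String> interlinks) {
        paths.put(intentId, List.copyOf(interlinks));
    }

    @Override
    public Optional<List<String>> intentPath(String intentId) {
        return Optional.ofNullable(paths.get(intentId));
    }

    @Override
    public List<String> intentsUsing(String interlink) {
        return paths.entrySet().stream()
                .filter(e -> e.getValue().contains(interlink))
                .map(Map.Entry::getKey)
                .sorted()
                .collect(Collectors.toList());
    }
}
```

Keeping the provider behind an interface like this would let the in-memory version be swapped for a distributed one without changing the provider logic.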

High availability for Multi-Cluster environment

Failure recovery for multi-cluster intents (TBD)

Failure on the multi-cluster communication system (TBD)

If the communication channel goes down, we may face a split-brain scenario in which both clusters are active but cannot share information with each other. How does the system recover from this situation?

ONOS Dependencies

Distributed multi-clusters communication system (Madan)

We need a communication mechanism to share topology information between clusters. Some ideas are summarized in Communication between clusters.

Internal Switch Metric and cross connection matrix (Thomas)

The single big switch exposed by the Multi-cluster Peering Provider is a representation of a bigger network (e.g., multiple devices, ports and paths). Our requirements are:

- expose a metric between all the ports of the switch: the Multi-cluster Peering Provider exposes the connection metric between each pair of switch ports, based on the underlying network topology. The metric could consider the sum of the delays and/or the minimum link bandwidth along the best path connecting the two ports;

- highlight which ports are not interconnected (no path exists in the underlying network topology to connect those two ports).
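Both requirements can be met by computing, for every pair of big-switch ports, the best path in the underlying topology. The sketch below assumes that "best" means minimum total delay (computed with Dijkstra's algorithm), reports the minimum link bandwidth along that same path, and leaves the matrix entry empty when no path exists. The class names, the metric choice and the identification of each big-switch port with a physical device are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.PriorityQueue;
import java.util.TreeMap;

// Hypothetical link of the underlying network (stored in both directions).
record Link(String to, double delayMs, double bandwidthMbps) { }

// Metric exposed for one pair of big-switch ports.
record PortMetric(double totalDelayMs, double bottleneckMbps) { }

final class CrossConnectionMatrix {
    private final Map<String, List<Link>> adjacency = new HashMap<>();

    void addLink(String a, String b, double delayMs, double bandwidthMbps) {
        adjacency.computeIfAbsent(a, k -> new ArrayList<>()).add(new Link(b, delayMs, bandwidthMbps));
        adjacency.computeIfAbsent(b, k -> new ArrayList<>()).add(new Link(a, delayMs, bandwidthMbps));
    }

    // Dijkstra on delay from src to dst, tracking the minimum bandwidth of the chosen path.
    // Returns empty when the two devices are not interconnected.
    Optional<PortMetric> metric(String src, String dst) {
        Map<String, Double> delay = new HashMap<>();
        Map<String, Double> bottleneck = new HashMap<>();
        PriorityQueue<Map.Entry<String, Double>> queue =
                new PriorityQueue<>(Map.Entry.<String, Double>comparingByValue());
        delay.put(src, 0.0);
        bottleneck.put(src, Double.POSITIVE_INFINITY);
        queue.add(Map.entry(src, 0.0));
        while (!queue.isEmpty()) {
            Map.Entry<String, Double> current = queue.poll();
            String node = current.getKey();
            if (current.getValue() > delay.get(node)) {
                continue; // stale queue entry
            }
            if (node.equals(dst)) {
                return Optional.of(new PortMetric(delay.get(node), bottleneck.get(node)));
            }
            for (Link link : adjacency.getOrDefault(node, List.of())) {
                double candidate = delay.get(node) + link.delayMs();
                if (candidate < delay.getOrDefault(link.to(), Double.POSITIVE_INFINITY)) {
                    delay.put(link.to(), candidate);
                    bottleneck.put(link.to(), Math.min(bottleneck.get(node), link.bandwidthMbps()));
                    queue.add(Map.entry(link.to(), candidate));
                }
            }
        }
        return Optional.empty();
    }

    // Matrix over the devices behind the big-switch ports; a missing entry means "no connection".
    Map<String, Map<String, PortMetric>> matrix(List<String> portDevices) {
        Map<String, Map<String, PortMetric>> result = new TreeMap<>();
        for (String a : portDevices) {
            for (String b : portDevices) {
                if (!a.equals(b)) {
                    metric(a, b).ifPresent(m ->
                            result.computeIfAbsent(a, k -> new TreeMap<>()).put(b, m));
                }
            }
        }
        return result;
    }
}
```

Note that the bottleneck is reported for the lowest-delay path only; if bandwidth mattered more than delay, a widest-path search would be the natural alternative.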

GUI extension

In the ONOS graphical interface, the user should be able to see the multi-cluster topology. The multi-cluster intents should also be visible.