This page describes the ODTN Phase 1.0 demonstration, which was carried out in NTT Communications' lab environment, and what the ODTN Phase 1.0 development achieved.

Scope

- Point to point network

- Directly connected transponders, or OLS configured out-of-band

- TAPI NB, OpenConfig SB

- No optical configuration (Configure only port enable/disable and frame mapping)

Available Devices

- NEC

- Only enable/disable client port and line port

- Infinera XT3300

- Sets up logical-channel-assignment, which associates a client port with a line channel

- Currently supports static frame mapping only; with some additional work, dynamic frame mapping could be supported using the optical channel resource management feature

- The configuration used in this demo is described here: https://docs.google.com/presentation/d/1RJkPq_6Yt6aS8txHNwZbKjAx3MK2SDhkoRoctj9_oNY/edit#slide=id.g3873fc6400_0_944

- Cassini

- The same as Infinera XT3300

Available models

- OpenConfig model

- Each vendor supports a different OpenConfig model version; check the detailed versions here: https://wiki.onosproject.org/display/ODTN/Available+OpenConfig+models+and+versions+for+phase+1

- TAPI model

- v2.0.2

Available TAPI NBI endpoints

- tapi-common

- get-service-interface-point-details

- get-service-interface-point-list *

- tapi-connectivity-service

- create-connectivity-service

- delete-connectivity-service

- get-connectivity-service-details

- get-connectivity-service-list *

* Not working correctly: only one entity is returned even if more than one exists. This limitation arises because onos-yang-tool cannot handle lists without keys.

This will be fixed in the near future; see: https://groups.google.com/a/opennetworking.org/forum/#!topic/odtn-sw/ls-p9JkKnuo

Demo Setup and Scenario for Infinera XT3300

Installation

Install and set up ONOS as a single instance.

Set up a pair of the available transponders listed above, or their NETCONF emulators:

- Infinera XT3300 - You can get the dockerized emulator here (ODTN internal use only): https://drive.google.com/drive/folders/1Md2zGKBKuIMnhTlYhiVSVhIeU-1fGqK6

- Cassini - You can download the Docker image via "docker pull onosproject/oc-cassini:0.2" or build your own local image from https://github.com/opennetworkinglab/ODTN-emulator/tree/master/emulator-oc-cassini

Demo

Setup onos

# Append odtn-service to variable $ONOS_APPS

$ export ONOS_APPS=odtn-service,$ONOS_APPS

$ ok clean

...

$ onos localhost

Welcome to Open Network Operating System (ONOS)!

____ _ ______ ____

/ __ \/ |/ / __ \/ __/

/ /_/ / / /_/ /\ \

\____/_/|_/\____/___/

Documentation: wiki.onosproject.org

Tutorials: tutorials.onosproject.org

Mailing lists: lists.onosproject.org

Come help out! Find out how at: contribute.onosproject.org

Hit '<tab>' for a list of available commands

and '[cmd] --help' for help on a specific command.

Hit '<ctrl-d>' or type 'system:shutdown' or 'logout' to shutdown ONOS.

onos> apps -a -s

* 8 org.onosproject.yang 2.1.0.SNAPSHOT YANG Compiler and Runtime

* 9 org.onosproject.config 2.1.0.SNAPSHOT Dynamic Configuration

* 10 org.onosproject.configsync 2.1.0.SNAPSHOT Dynamic Configuration Synchronizer

* 11 org.onosproject.faultmanagement 2.1.0.SNAPSHOT Fault Management

* 12 org.onosproject.netconf 2.1.0.SNAPSHOT NETCONF Provider

* 13 org.onosproject.configsync-netconf 2.1.0.SNAPSHOT Dynamic Configuration Synchronizer for NETCONF

* 21 org.onosproject.restsb 2.1.0.SNAPSHOT REST Provider

* 27 org.onosproject.drivers 2.1.0.SNAPSHOT Default Drivers

* 34 org.onosproject.restconf 2.1.0.SNAPSHOT RESTCONF Application Module

* 39 org.onosproject.optical-model 2.1.0.SNAPSHOT Optical Network Model

* 40 org.onosproject.drivers.optical 2.1.0.SNAPSHOT Basic Optical Drivers

* 42 org.onosproject.proxyarp 2.1.0.SNAPSHOT Proxy ARP/NDP

* 44 org.onosproject.hostprovider 2.1.0.SNAPSHOT Host Location Provider

* 45 org.onosproject.lldpprovider 2.1.0.SNAPSHOT LLDP Link Provider

* 46 org.onosproject.openflow-base 2.1.0.SNAPSHOT OpenFlow Base Provider

* 47 org.onosproject.openflow 2.1.0.SNAPSHOT OpenFlow Provider Suite

* 57 org.onosproject.protocols.restconfserver 2.1.0.SNAPSHOT RESTCONF Server Module

* 61 org.onosproject.pathpainter 2.1.0.SNAPSHOT Path Visualization

* 88 org.onosproject.models.tapi 2.1.0.SNAPSHOT ONF Transport API YANG Models

* 89 org.onosproject.models.ietf 2.1.0.SNAPSHOT IETF YANG Models

* 90 org.onosproject.models.openconfig 2.1.0.SNAPSHOT OpenConfig YANG Models

* 91 org.onosproject.models.openconfig-infinera 2.1.0.SNAPSHOT OpenConfig Infinera XT3300 YANG Models

* 92 org.onosproject.models.openconfig-odtn 2.1.0.SNAPSHOT OpenConfig RD v0.3 YANG Models

* 93 org.onosproject.odtn-api 2.1.0.SNAPSHOT ODTN API & Utilities Application

* 94 org.onosproject.drivers.netconf 2.1.0.SNAPSHOT Generic NETCONF Drivers

* 95 org.onosproject.drivers.odtn-driver 2.1.0.SNAPSHOT ODTN Driver

* 96 org.onosproject.odtn-service 2.1.0.SNAPSHOT ODTN Service Application

* 177 org.onosproject.mobility 2.1.0.SNAPSHOT Host Mobility

Initially there are no devices/ports/links registered.

onos> devices

onos> ports

onos> links

In DCS store, only TAPI context and topology are initially set. We can see the TAPI model state in DCS by using the following command:

onos> odtn-show-tapi-context

XML:

<context xmlns="urn:onf:otcc:yang:tapi-common">

<connectivity-context xmlns="urn:onf:otcc:yang:tapi-connectivity"/>

<topology-context xmlns="urn:onf:otcc:yang:tapi-topology">

<topology>

<uuid>c4b7a7fb-32b9-4f11-a146-1b7df7f59839</uuid>

</topology>

</topology-context>

</context>

Because there are no devices or links in the ONOS topology, the TAPI context shows nothing except the topology UUID.

Setup Emulator

xt-3300 emulator

To set up the OpenConfig emulator, download the emulator image (https://drive.google.com/drive/folders/1Md2zGKBKuIMnhTlYhiVSVhIeU-1fGqK6).

If this is the first time you are running the demo, go into the downloaded emulator folder (for example, "emulator-oc-xt3300") and execute:

# The Dockerfile contains the image build configuration; <tag> is just the Docker image name.

docker build -f Dockerfile -t <tag> .

Then, for this run and any subsequent run of the emulator, you can do:

# <tag> specifies the Docker image you just built. <host_port>:<container_port> defines the port mapping between your host and the container created from <tag>.

# In the Dockerfile, "EXPOSE 830" means port 830 is exposed, so <container_port> should be 830.

# Options such as -p must come before the image name; anything after <tag> is passed to the container as its command.

docker run -p <host_port>:<container_port> <tag>

Or, using docker-compose, you can run a pair of OpenConfig emulators as follows (suggested):

$ cat docker-compose.yml

version: '2'

services:

openconfig_xt3300_1:

build: ./emulator-oc-xt3300

ports:

- "11002:830"

openconfig_xt3300_2:

build: ./emulator-oc-xt3300

ports:

- "11003:830"

$ docker-compose up -d

Cassini emulator

Building the xt-3300 emulator is somewhat involved, so we suggest using the Cassini emulator instead. All subsequent sections are based on Cassini emulators.

To run two emulators as described above, run the commands listed below:

docker pull onosproject/oc-cassini:0.2

docker run -it -d --name openconfig_cassini_1 -p 11002:830 onosproject/oc-cassini:0.2

docker run -it -d --name openconfig_cassini_2 -p 11003:830 onosproject/oc-cassini:0.2
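Once the containers are started, a quick TCP probe can confirm that the mapped host ports accept connections before any NETCONF session is attempted. The following is a minimal sketch, not part of the ODTN tooling; the helper names `netconf_port_open` and `check_emulators` are hypothetical, and the ports are the host ports used in the commands above.

```python
import socket


def netconf_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


# Host ports mapped to container port 830 in the docker run commands (assumed unchanged).
EMULATOR_PORTS = (11002, 11003)


def check_emulators(host="127.0.0.1", ports=EMULATOR_PORTS):
    """Map each port to whether it currently accepts TCP connections."""
    return {port: netconf_port_open(host, port) for port in ports}
```

Calling `check_emulators()` returns a dictionary keyed by port. An open port only shows that something is listening; it does not prove that the NETCONF server behind it is healthy.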

Checking if the emulator works correctly

If you want to check that the Docker instance works correctly, you can use the netconf-console command to send a "hello" request to the emulator. netconf-console is not bundled with ONOS and must be installed separately.

$ netconf-console --host=127.0.0.1 --port=11002 -u root -p root --hello

<nc:hello xmlns:nc="urn:ietf:params:xml:ns:netconf:base:1.0">

<nc:capabilities>

<nc:capability>urn:ietf:params:netconf:base:1.0</nc:capability>

<nc:capability>urn:ietf:params:netconf:base:1.1</nc:capability>

<nc:capability>urn:ietf:params:netconf:capability:writable-running:1.0</nc:capability>

<nc:capability>urn:ietf:params:netconf:capability:candidate:1.0</nc:capability>

<nc:capability>urn:ietf:params:netconf:capability:rollback-on-error:1.0</nc:capability>

<nc:capability>urn:ietf:params:netconf:capability:validate:1.1</nc:capability>

<nc:capability>urn:ietf:params:netconf:capability:startup:1.0</nc:capability>

<nc:capability>urn:ietf:params:netconf:capability:url:1.0</nc:capability>

<nc:capability>urn:ietf:params:netconf:capability:xpath:1.0</nc:capability>

<nc:capability>urn:ietf:params:netconf:capability:with-defaults:1.0?basic-mode=explicit&also-supported=report-all,report-all-tagged,trim,explicit</nc:capability>

<nc:capability>urn:ietf:params:netconf:capability:notification:1.0</nc:capability>

<nc:capability>urn:ietf:params:netconf:capability:interleave:1.0</nc:capability>

<nc:capability>urn:ietf:params:xml:ns:yang:ietf-yang-metadata?module=ietf-yang-metadata&revision=2016-08-05</nc:capability>

<nc:capability>urn:ietf:params:xml:ns:yang:1?module=yang&revision=2017-02-20</nc:capability>

<nc:capability>urn:ietf:params:xml:ns:yang:ietf-inet-types?module=ietf-inet-types&revision=2013-07-15</nc:capability>

<nc:capability>urn:ietf:params:xml:ns:yang:ietf-yang-types?module=ietf-yang-types&revision=2013-07-15</nc:capability>

<nc:capability>urn:ietf:params:netconf:capability:yang-library:1.0?revision=2018-01-17&module-set-id=32</nc:capability>

<nc:capability>urn:ietf:params:xml:ns:yang:ietf-netconf-acm?module=ietf-netconf-acm&revision=2018-02-14</nc:capability>

<nc:capability>urn:ietf:params:xml:ns:netconf:base:1.0?module=ietf-netconf&revision=2011-06-01&features=writable-running,candidate,rollback-on-error,validate,startup,url,xpath</nc:capability>

<nc:capability>urn:ietf:params:xml:ns:yang:ietf-netconf-notifications?module=ietf-netconf-notifications&revision=2012-02-06</nc:capability>

<nc:capability>urn:ietf:params:xml:ns:netconf:notification:1.0?module=notifications&revision=2008-07-14</nc:capability>

<nc:capability>urn:ietf:params:xml:ns:netmod:notification?module=nc-notifications&revision=2008-07-14</nc:capability>

<nc:capability>http://example.net/turing-machine?module=turing-machine&revision=2013-12-27</nc:capability>

<nc:capability>urn:ietf:params:xml:ns:yang:ietf-netconf-with-defaults?module=ietf-netconf-with-defaults&revision=2011-06-01</nc:capability>

<nc:capability>urn:ietf:params:xml:ns:yang:ietf-netconf-monitoring?module=ietf-netconf-monitoring&revision=2010-10-04</nc:capability>

<nc:capability>urn:ietf:params:xml:ns:yang:iana-if-type?module=iana-if-type&revision=2017-01-19</nc:capability>

<nc:capability>http://openconfig.net/yang/openconfig-ext?module=openconfig-extensions&revision=2017-04-11</nc:capability>

<nc:capability>http://openconfig.net/yang/openconfig-types?module=openconfig-types&revision=2018-05-05</nc:capability>

<nc:capability>http://openconfig.net/yang/types/yang?module=openconfig-yang-types&revision=2018-04-24</nc:capability>

<nc:capability>http://openconfig.net/yang/platform-types?module=openconfig-platform-types&revision=2018-05-05</nc:capability>

<nc:capability>http://openconfig.net/yang/transport-types?module=openconfig-transport-types&revision=2018-05-16</nc:capability>

<nc:capability>http://openconfig.net/yang/alarms/types?module=openconfig-alarm-types&revision=2018-01-16</nc:capability>

<nc:capability>http://openconfig.net/yang/interfaces?module=openconfig-interfaces&revision=2018-04-24</nc:capability>

<nc:capability>http://openconfig.net/yang/interfaces/ethernet?module=openconfig-if-ethernet&revision=2018-04-10</nc:capability>

<nc:capability>http://openconfig.net/yang/platform?module=openconfig-platform&revision=2018-06-03</nc:capability>

<nc:capability>http://openconfig.net/yang/platform/port?module=openconfig-platform-port&revision=2018-01-20</nc:capability>

<nc:capability>http://openconfig.net/yang/platform/transceiver?module=openconfig-platform-transceiver&revision=2018-05-15</nc:capability>

<nc:capability>http://openconfig.net/yang/terminal-device?module=openconfig-terminal-device&revision=2017-07-08</nc:capability>

<nc:capability>http://openconfig.net/yang/platform/linecard?module=openconfig-platform-linecard&revision=2017-08-03</nc:capability>

<nc:capability>http://openconfig.net/yang/transport-line-common?module=openconfig-transport-line-common&revision=2017-09-08</nc:capability>

<nc:capability>http://openconfig.net/yang/optical-transport-line-protection?module=openconfig-transport-line-protection&revision=2017-09-08</nc:capability>

</nc:capabilities>

</nc:hello>
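The hello reply can also be checked programmatically, for example to confirm that the emulator advertises openconfig-terminal-device. The sketch below extracts module names and revisions from the capability URIs. It assumes the reply is well-formed XML, meaning the "&" separators shown in the listing above are escaped as "&amp;amp;" on the wire; `advertised_modules` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET
from urllib.parse import parse_qs

# Namespace of the NETCONF <hello> element, as shown in the output above.
NC_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"


def advertised_modules(hello_xml):
    """Return {module: revision} for capabilities that carry a module= parameter."""
    root = ET.fromstring(hello_xml)
    modules = {}
    for cap in root.iter("{%s}capability" % NC_NS):
        uri = (cap.text or "").strip()
        # Everything after the first '?' is a query string of key=value pairs.
        params = parse_qs(uri.partition("?")[2])
        if "module" in params:
            modules[params["module"][0]] = params.get("revision", [None])[0]
    return modules
```

Base capabilities such as `urn:ietf:params:netconf:base:1.0` have no `module=` parameter and are skipped.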

Device registration and topology discovery

Register transponder devices using onos-netcfg.

If you are running both ONOS and the transponders on your local machine, you can simply use the file downloaded from GitHub:

wget https://raw.githubusercontent.com/opennetworkinglab/ODTN-emulator/master/topo/without-tapi/device.json

onos-netcfg localhost device.json

The content of device.json is:

$ cat device.json

{

"devices" : {

"netconf:127.0.0.1:11002" : {

"basic" : {

"name":"cassini2",

"driver":"cassini-ocnos"

},

"netconf" : {

"ip" : "127.0.0.1",

"port" : "11002",

"username" : "root",

"password" : "root",

"idle-timeout" : "0"

}

},

"netconf:127.0.0.1:11003" : {

"basic" : {

"name":"cassini1",

"driver":"cassini-ocnos"

},

"netconf" : {

"ip" : "127.0.0.1",

"port" : "11003",

"username" : "root",

"password" : "root",

"idle-timeout" : "0"

}

}

}

}
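If the emulators run on different hosts or ports, the same structure can be generated rather than edited by hand. This is a minimal sketch that mirrors the fields of the device.json shown above; the function name `build_device_netcfg` and its parameters are hypothetical, and the defaults are simply the values used in this demo.

```python
import json


def build_device_netcfg(devices, driver="cassini-ocnos", user="root", password="root"):
    """Build a netcfg dict from an iterable of (name, ip, port) tuples."""
    cfg = {"devices": {}}
    for name, ip, port in devices:
        device_id = "netconf:%s:%d" % (ip, port)
        cfg["devices"][device_id] = {
            "basic": {"name": name, "driver": driver},
            "netconf": {
                "ip": ip,
                "port": str(port),  # device.json stores the port as a string
                "username": user,
                "password": password,
                "idle-timeout": "0",
            },
        }
    return cfg


demo_devices = build_device_netcfg(
    [("cassini2", "127.0.0.1", 11002), ("cassini1", "127.0.0.1", 11003)]
)
print(json.dumps(demo_devices, indent=2))
```

The printed JSON can be saved to a file and pushed with onos-netcfg in the same way as the downloaded device.json.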

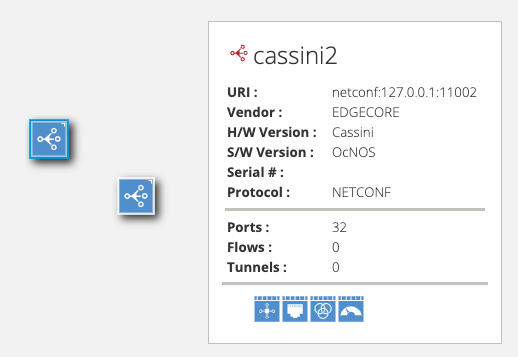

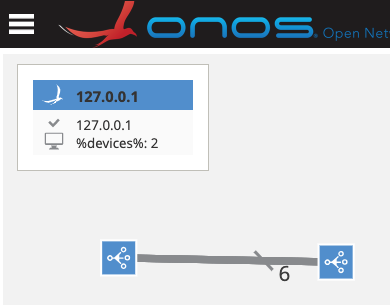

Check the registered devices and ports.

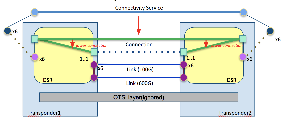

You can see these devices and ports from ONOS GUI as below:

You can also see the details in the ONOS CLI. Each Cassini emulator has 16 client-side ports and 16 line-side ports.

At the same time, the device and port events trigger the topology discovery process.

The topology discovery process issues a NETCONF <get> request to obtain the device config and operational state, then generates the TAPI Node/NEP/SIP objects corresponding to the devices and their ports.

After device registration and discovery, we can see the TAPI topology state updated: <service-interface-point> and <topology-context> are filled.

onos> odtn-show-tapi-context

XML:

<context xmlns="urn:onf:otcc:yang:tapi-common">

<service-interface-point>

<uuid>5b05bb43-bd95-449f-a4ac-011b43f9d078</uuid>

<layer-protocol-name>PHOTONIC_MEDIA</layer-protocol-name>

<name>

<value-name>onos-cp</value-name>

<value>netconf:127.0.0.1:11002/209</value>

</name>

</service-interface-point>

....

<topology-context xmlns="urn:onf:otcc:yang:tapi-topology">

<topology>

<node>

<owned-node-edge-point>

<cep-list xmlns="urn:onf:otcc:yang:tapi-connectivity">

<connection-end-point>

<parent-node-edge-point>

......

</node>

<node>

<owned-node-edge-point>

<mapped-service-interface-point>

<service-interface-point-uuid>d525468e-f833-4604-b186-29752e76075b</service-interface-point-uuid>

</mapped-service-interface-point>

......

<uuid>29c557c2-3c5a-4ec2-bc90-21d823f66229</uuid>

</topology>

</topology-context>
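To relate each TAPI SIP back to an ONOS connect point, the `onos-cp` name entries in the context can be read out. The sketch below assumes the complete XML document has been captured, without the leading "XML:" line and without the "...." elisions used in the listing above; `sip_connect_points` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Default namespace of the <context> element in the output above.
NS = {"c": "urn:onf:otcc:yang:tapi-common"}


def sip_connect_points(context_xml):
    """Return {sip_uuid: connect_point} using each SIP's 'onos-cp' name entry."""
    root = ET.fromstring(context_xml)
    result = {}
    for sip in root.findall("c:service-interface-point", NS):
        uuid = sip.findtext("c:uuid", namespaces=NS)
        for name in sip.findall("c:name", NS):
            if name.findtext("c:value-name", namespaces=NS) == "onos-cp":
                result[uuid] = name.findtext("c:value", namespaces=NS)
    return result
```

For the SIP shown above, the mapping would contain the UUID 5b05bb43-bd95-449f-a4ac-011b43f9d078 pointing at netconf:127.0.0.1:11002/209.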

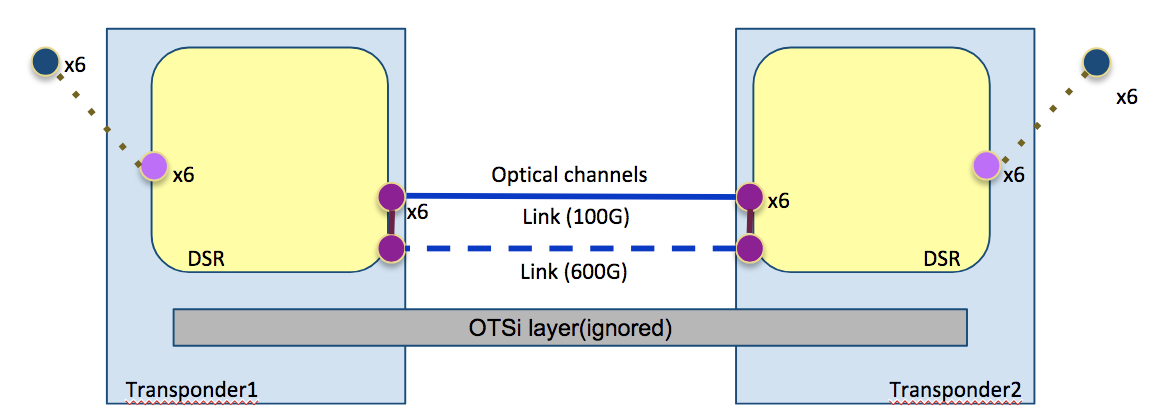

Currently, we don't have any link discovery feature, so we also need to register links between devices.

Temporarily, all optical channels are represented as separate links, and all optical channel endpoints are treated as ConnectPoints in ONOS. This might not be ideal, and we should reconsider how to map TAPI topology models onto ONOS models.

You can use the link file downloaded from github as below:

wget https://raw.githubusercontent.com/opennetworkinglab/ODTN-emulator/master/topo/without-tapi/link.json

onos-netcfg localhost link.json

The content of link.json is:

$ cat link.json

{

"links": {

"netconf:127.0.0.1:11002/201-netconf:127.0.0.1:11003/201": {

"basic": {

"type": "OPTICAL",

"metric": 1,

"durable": true,

"bidirectional": true

}

},

"netconf:127.0.0.1:11002/202-netconf:127.0.0.1:11003/202": {

"basic": {

"type": "OPTICAL",

"metric": 1,

"durable": true,

"bidirectional": true

}

},

"netconf:127.0.0.1:11002/203-netconf:127.0.0.1:11003/203": {

"basic": {

"type": "OPTICAL",

"metric": 1,

"durable": true,

"bidirectional": true

}

},

"netconf:127.0.0.1:11002/204-netconf:127.0.0.1:11003/204": {

"basic": {

"type": "OPTICAL",

"metric": 1,

"durable": true,

"bidirectional": true

}

},

"netconf:127.0.0.1:11002/205-netconf:127.0.0.1:11003/205": {

"basic": {

"type": "OPTICAL",

"metric": 1,

"durable": true,

"bidirectional": true

}

},

"netconf:127.0.0.1:11002/206-netconf:127.0.0.1:11003/206": {

"basic": {

"type": "OPTICAL",

"metric": 1,

"durable": true,

"bidirectional": true

}

}

}

}
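Because every entry in link.json follows the same pattern (the same port number on both devices, one link per optical channel), the file can be generated. The sketch below reproduces the six links shown above; `build_link_netcfg` is a hypothetical helper, and pairing identical port numbers is an assumption taken from this demo's file rather than a general rule.

```python
import json


def build_link_netcfg(src_device, dst_device, ports):
    """Build a links netcfg dict with one OPTICAL link per port number."""
    links = {}
    for port in ports:
        key = "%s/%d-%s/%d" % (src_device, port, dst_device, port)
        links[key] = {
            "basic": {
                "type": "OPTICAL",
                "metric": 1,
                "durable": True,
                "bidirectional": True,
            }
        }
    return {"links": links}


demo_links = build_link_netcfg(
    "netconf:127.0.0.1:11002", "netconf:127.0.0.1:11003", range(201, 207)
)
print(json.dumps(demo_links, indent=2))
```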

After that, we can see that the links are registered and that the TAPI links are also set in DCS.

onf@root > links 17:25:21

src=netconf:127.0.0.1:11002/201, dst=netconf:127.0.0.1:11003/201, type=OPTICAL, state=ACTIVE, durable=true, metric=1.0, expected=true

src=netconf:127.0.0.1:11002/202, dst=netconf:127.0.0.1:11003/202, type=OPTICAL, state=ACTIVE, durable=true, metric=1.0, expected=true

src=netconf:127.0.0.1:11002/203, dst=netconf:127.0.0.1:11003/203, type=OPTICAL, state=ACTIVE, durable=true, metric=1.0, expected=true

src=netconf:127.0.0.1:11002/204, dst=netconf:127.0.0.1:11003/204, type=OPTICAL, state=ACTIVE, durable=true, metric=1.0, expected=true

src=netconf:127.0.0.1:11002/205, dst=netconf:127.0.0.1:11003/205, type=OPTICAL, state=ACTIVE, durable=true, metric=1.0, expected=true

src=netconf:127.0.0.1:11002/206, dst=netconf:127.0.0.1:11003/206, type=OPTICAL, state=ACTIVE, durable=true, metric=1.0, expected=true

src=netconf:127.0.0.1:11003/201, dst=netconf:127.0.0.1:11002/201, type=OPTICAL, state=ACTIVE, durable=true, metric=1.0, expected=true

src=netconf:127.0.0.1:11003/202, dst=netconf:127.0.0.1:11002/202, type=OPTICAL, state=ACTIVE, durable=true, metric=1.0, expected=true

src=netconf:127.0.0.1:11003/203, dst=netconf:127.0.0.1:11002/203, type=OPTICAL, state=ACTIVE, durable=true, metric=1.0, expected=true

src=netconf:127.0.0.1:11003/204, dst=netconf:127.0.0.1:11002/204, type=OPTICAL, state=ACTIVE, durable=true, metric=1.0, expected=true

src=netconf:127.0.0.1:11003/205, dst=netconf:127.0.0.1:11002/205, type=OPTICAL, state=ACTIVE, durable=true, metric=1.0, expected=true

src=netconf:127.0.0.1:11003/206, dst=netconf:127.0.0.1:11002/206, type=OPTICAL, state=ACTIVE, durable=true, metric=1.0, expected=true

onos> odtn-show-tapi-context

XML:

<context xmlns="urn:onf:otcc:yang:tapi-common">

<service-interface-point>

...

<topology>

<link>

<node-edge-point>

<node-uuid>d136bb1e-bad5-4cb3-bc94-e10831691ad3</node-uuid>

<topology-uuid>29c557c2-3c5a-4ec2-bc90-21d823f66229</topology-uuid>

<node-edge-point-uuid>ac02a247-d940-42f5-9b1b-c548cd4b86ff</node-edge-point-uuid>

</node-edge-point>

<uuid>65b2f0c1-8cd2-424e-96de-7e1da2de2cc1</uuid>

<node-edge-point>

<node-uuid>f6e8a2f4-4824-4702-beaf-4b48a4b3bf1f</node-uuid>

<topology-uuid>29c557c2-3c5a-4ec2-bc90-21d823f66229</topology-uuid>

<node-edge-point-uuid>206aec84-31e4-4337-b5fa-8558ed774804</node-edge-point-uuid>

</node-edge-point>

</link>

...

<node>

...

</topology>

...

</context>

All devices and links needed for this demonstration are now registered, and the corresponding TAPI topology is stored in DCS. We can use this stored topology and the TAPI connectivity-service NBI to request an end-to-end path.

We can also get the TAPI ServiceInterfacePoints via the RESTCONF NBI. (There is a limitation; see Available TAPI NBI endpoints.)

# The content of empty-body.json is just {}.

$ curl -u onos:rocks -X POST -H Content-Type:application/json -T empty-body.json http://localhost:8181/onos/restconf/operations/tapi-common:get-service-interface-point-list | jq

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 3107 100 3104 100 3 15411 14 --:--:-- --:--:-- --:--:-- 15366

{

"tapi-common:output": {

"sip": [

{

"uuid": "01836dcd-7ceb-4c76-9900-3b8a8a44256b"

},

{

"uuid": "7ecae6a4-c2a0-4586-948a-7ea1cec9a24c"

},

{

"uuid": "cccf1c8f-b953-416f-a57b-e94eeb70da90"

},

{

"uuid": "59fbbd3b-5e03-4d20-bfb6-187d441726cb"

},

{

"uuid": "e60f4cd0-b705-4b48-926b-0be47a163b5b"

},

{

"uuid": "f04614ae-1395-408e-990b-b0dc49ce6fc6"

},

{

"uuid": "e73eb4a2-dcda-41ac-8073-126beebcbe9e"

},

{

"uuid": "4ddf0ae9-ca55-4e2f-9c2c-70e50ebe76f4"

},

......

}

}
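The same RPC can be issued from Python instead of curl. The following is a sketch using only the standard library; the URL pattern, credentials, and empty JSON body are taken from the curl command above, while the helper names `build_rpc_request` and `call_rpc` are hypothetical.

```python
import base64
import json
import urllib.request


def build_rpc_request(rpc, body=None, host="localhost", port=8181,
                      user="onos", password="rocks"):
    """Build a POST request for a RESTCONF operation such as
    'tapi-common:get-service-interface-point-list'."""
    url = "http://%s:%d/onos/restconf/operations/%s" % (host, port, rpc)
    data = json.dumps({} if body is None else body).encode("utf-8")
    token = base64.b64encode(("%s:%s" % (user, password)).encode("utf-8")).decode("ascii")
    req = urllib.request.Request(url, data=data, method="POST")
    req.add_header("Content-Type", "application/json")
    req.add_header("Authorization", "Basic " + token)
    return req


def call_rpc(rpc, body=None, **kwargs):
    """Send the request and decode the JSON reply (requires a running ONOS)."""
    with urllib.request.urlopen(build_rpc_request(rpc, body, **kwargs)) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With ONOS running, `call_rpc("tapi-common:get-service-interface-point-list")` should return the same structure as the jq output above, subject to the keyless-list limitation noted earlier.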

With device/link registration and TAPI topology creation done, we are ready to request path creation from ONOS/ODTN.

Create TAPI Connectivity Service

Send a create-connectivity-service request to ONOS via the script execute-tapi-post-call.py as follows:

# Create client-side service (Python 2.7.x)

execute-tapi-post-call.py 127.0.0.1 tapi-connectivity:create-connectivity-service client-side

You can also use execute-tapi-post-call.py to create a line-side service:

# Create line-side service

execute-tapi-post-call.py 127.0.0.1 tapi-connectivity:create-connectivity-service line-side

The output of the client-side request is:

There is no existed connectivity service inside ONOS.

Create client-side services:

- from netconf:127.0.0.1:11003/104 to netconf:127.0.0.1:11002/104.

This service should go through:

- netconf:127.0.0.1:11003/204 and netconf:127.0.0.1:11002/204.

The request context is: tapi-connectivity:create-connectivity-service.

The return message of the request is:

{"tapi-connectivity:output": {"service": {"connection": [{"connection-uuid": "92cff1b5-cfa9-4329-9dec-a007a3259109"}], "end-point": [{"service-interface-point": {"service-interface-point-uuid": "7be5a226-c27d-4815-b121-004a26af35e5"}, "local-id": "4515e51a-1567-42c0-a37f-71b9fe4f9d1b"}, {"service-interface-point": {"service-interface-point-uuid": "d25ac16a-c6ef-44a6-bc65-1e03eedb52e8"}, "local-id": "21b2dd0f-bc2e-40b9-af31-234e101e76df"}], "uuid": "4bb766b4-1e3e-48cb-a302-15f70a353a08"}}}
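The identifiers in this reply are needed later, for example the service UUID when deleting the service. A small sketch for pulling them out of the returned JSON follows; `summarize_service` is a hypothetical helper, and the field names are the ones visible in the reply above.

```python
import json


def summarize_service(response_text):
    """Extract the service UUID, connection UUIDs, and SIP UUIDs from a
    create-connectivity-service reply."""
    service = json.loads(response_text)["tapi-connectivity:output"]["service"]
    return {
        "service_uuid": service["uuid"],
        "connection_uuids": [c["connection-uuid"] for c in service["connection"]],
        "sip_uuids": [
            ep["service-interface-point"]["service-interface-point-uuid"]
            for ep in service["end-point"]
        ],
    }
```

Applied to the reply above, `service_uuid` is 4bb766b4-1e3e-48cb-a302-15f70a353a08 and the single connection UUID is 92cff1b5-cfa9-4329-9dec-a007a3259109.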

Checking the TAPI context, you can see that the following objects are created:

- The new connectivity service you requested

- The top-level connection of the connectivity service and its endpoints (CEPs)

- The lower connections needed to build the top-level connection, and their endpoints (CEPs)

There are two ways to check the connectivity.

1. You can use curl to request connectivity-service, and get the output:

$ curl -u onos:rocks -X POST -H Content-Type:application/json -T empty-body.json http://localhost:8181/onos/restconf/operations/tapi-connectivity:get-connectivity-service-list | jq

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 481 100 478 100 3 2591 16 --:--:-- --:--:-- --:--:-- 2597

{

"tapi-connectivity:output": {

"service": [

{

"connection": [

{

"connection-uuid": "92cff1b5-cfa9-4329-9dec-a007a3259109"

}

],

"end-point": [

{

"local-id": "21b2dd0f-bc2e-40b9-af31-234e101e76df",

"service-interface-point": {

"service-interface-point-uuid": "d25ac16a-c6ef-44a6-bc65-1e03eedb52e8"

}

},

{

"local-id": "4515e51a-1567-42c0-a37f-71b9fe4f9d1b",

"service-interface-point": {

"service-interface-point-uuid": "7be5a226-c27d-4815-b121-004a26af35e5"

}

}

],

"uuid": "4bb766b4-1e3e-48cb-a302-15f70a353a08"

}

]

}

}

2. You can use odtn-show-tapi-context to see the full connectivity context:

onos> odtn-show-tapi-context

XML:

...

</service-interface-point>

<connectivity-context xmlns="urn:onf:otcc:yang:tapi-connectivity">

<connection>

<connection-end-point>

<node-edge-point-uuid>698ee55b-f983-4674-b10d-48171f2772dc</node-edge-point-uuid>

<topology-uuid>64498305-73ff-487b-bb63-4c2921335604</topology-uuid>

<node-uuid>66e83ebf-b44f-4967-828b-6a348d9722ef</node-uuid>

<connection-end-point-uuid>81f568bd-6260-45f3-991b-ae6dc0059419</connection-end-point-uuid>

</connection-end-point>

<uuid>2a1683da-7015-4cde-92c8-5f43d8dd3f9b</uuid>

<route>

<local-id>cd23e8a9-ba72-45ab-bc7b-7b498a38aab4</local-id>

</route>

<connection-end-point>

<node-edge-point-uuid>dcd09ae9-03d3-499f-a576-6a6e0c5da343</node-edge-point-uuid>

<topology-uuid>64498305-73ff-487b-bb63-4c2921335604</topology-uuid>

<node-uuid>66e83ebf-b44f-4967-828b-6a348d9722ef</node-uuid>

<connection-end-point-uuid>8a3640ad-6748-467e-b5e8-0414d1642145</connection-end-point-uuid>

</connection-end-point>

</connection>

<connection>

<route>

<local-id>a8beb725-2fc3-466e-8dca-a0c7840d678b</local-id>

</route>

<uuid>a69d88a0-4b01-4ce2-a342-95ae69834481</uuid>

<connection-end-point>

<node-edge-point-uuid>19b790e5-afb2-4967-b43a-296de5a30165</node-edge-point-uuid>

<topology-uuid>64498305-73ff-487b-bb63-4c2921335604</topology-uuid>

<node-uuid>cb99dbc3-1b8f-4cc2-98f1-d71714e0217d</node-uuid>

<connection-end-point-uuid>bf1fc653-2012-4696-88d7-3b52ae00127e</connection-end-point-uuid>

</connection-end-point>

<connection-end-point>

<node-edge-point-uuid>c92a5b72-bc42-4117-b2a3-0cad464a5ac7</node-edge-point-uuid>

<topology-uuid>64498305-73ff-487b-bb63-4c2921335604</topology-uuid>

<node-uuid>cb99dbc3-1b8f-4cc2-98f1-d71714e0217d</node-uuid>

<connection-end-point-uuid>630ceaf8-5df1-4991-ad0c-fc53ee11e03a</connection-end-point-uuid>

</connection-end-point>

</connection>

<connectivity-service>

<end-point>

<local-id>21b2dd0f-bc2e-40b9-af31-234e101e76df</local-id>

<service-interface-point>

<service-interface-point-uuid>d25ac16a-c6ef-44a6-bc65-1e03eedb52e8</service-interface-point-uuid>

</service-interface-point>

</end-point>

<uuid>4bb766b4-1e3e-48cb-a302-15f70a353a08</uuid>

<connection>

<connection-uuid>92cff1b5-cfa9-4329-9dec-a007a3259109</connection-uuid>

</connection>

<end-point>

<local-id>4515e51a-1567-42c0-a37f-71b9fe4f9d1b</local-id>

<service-interface-point>

<service-interface-point-uuid>7be5a226-c27d-4815-b121-004a26af35e5</service-interface-point-uuid>

</service-interface-point>

</end-point>

</connectivity-service>

<connection>

<route>

<local-id>bbe12ceb-8bd4-4b5e-ad32-ff04ba07ba3a</local-id>

<connection-end-point>

<node-edge-point-uuid>698ee55b-f983-4674-b10d-48171f2772dc</node-edge-point-uuid>

<topology-uuid>64498305-73ff-487b-bb63-4c2921335604</topology-uuid>

<node-uuid>66e83ebf-b44f-4967-828b-6a348d9722ef</node-uuid>

<connection-end-point-uuid>81f568bd-6260-45f3-991b-ae6dc0059419</connection-end-point-uuid>

</connection-end-point>

<connection-end-point>

<node-edge-point-uuid>19b790e5-afb2-4967-b43a-296de5a30165</node-edge-point-uuid>

<topology-uuid>64498305-73ff-487b-bb63-4c2921335604</topology-uuid>

<node-uuid>cb99dbc3-1b8f-4cc2-98f1-d71714e0217d</node-uuid>

<connection-end-point-uuid>bf1fc653-2012-4696-88d7-3b52ae00127e</connection-end-point-uuid>

</connection-end-point>

<connection-end-point>

<node-edge-point-uuid>c92a5b72-bc42-4117-b2a3-0cad464a5ac7</node-edge-point-uuid>

<topology-uuid>64498305-73ff-487b-bb63-4c2921335604</topology-uuid>

<node-uuid>cb99dbc3-1b8f-4cc2-98f1-d71714e0217d</node-uuid>

<connection-end-point-uuid>630ceaf8-5df1-4991-ad0c-fc53ee11e03a</connection-end-point-uuid>

</connection-end-point>

<connection-end-point>

<node-edge-point-uuid>dcd09ae9-03d3-499f-a576-6a6e0c5da343</node-edge-point-uuid>

<topology-uuid>64498305-73ff-487b-bb63-4c2921335604</topology-uuid>

<node-uuid>66e83ebf-b44f-4967-828b-6a348d9722ef</node-uuid>

<connection-end-point-uuid>8a3640ad-6748-467e-b5e8-0414d1642145</connection-end-point-uuid>

</connection-end-point>

</route>

<connection-end-point>

<node-edge-point-uuid>698ee55b-f983-4674-b10d-48171f2772dc</node-edge-point-uuid>

<topology-uuid>64498305-73ff-487b-bb63-4c2921335604</topology-uuid>

<node-uuid>66e83ebf-b44f-4967-828b-6a348d9722ef</node-uuid>

<connection-end-point-uuid>81f568bd-6260-45f3-991b-ae6dc0059419</connection-end-point-uuid>

</connection-end-point>

<uuid>92cff1b5-cfa9-4329-9dec-a007a3259109</uuid>

<connection-end-point>

<node-edge-point-uuid>c92a5b72-bc42-4117-b2a3-0cad464a5ac7</node-edge-point-uuid>

<topology-uuid>64498305-73ff-487b-bb63-4c2921335604</topology-uuid>

<node-uuid>cb99dbc3-1b8f-4cc2-98f1-d71714e0217d</node-uuid>

<connection-end-point-uuid>630ceaf8-5df1-4991-ad0c-fc53ee11e03a</connection-end-point-uuid>

</connection-end-point>

<lower-connection>

<connection-uuid>a69d88a0-4b01-4ce2-a342-95ae69834481</connection-uuid>

</lower-connection>

<lower-connection>

<connection-uuid>2a1683da-7015-4cde-92c8-5f43d8dd3f9b</connection-uuid>

</lower-connection>

</connection>

</connectivity-context>

...
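The relationship between the top-level connection and its lower connections can be recovered from this XML by following the `<lower-connection>` references. The sketch below assumes the `<connectivity-context>` element has been extracted as a standalone, well-formed document; `connection_tree` and `top_level_connections` are hypothetical helper names.

```python
import xml.etree.ElementTree as ET

TAPI_CONN = "urn:onf:otcc:yang:tapi-connectivity"
NS = {"t": TAPI_CONN}


def connection_tree(connectivity_context_xml):
    """Map each connection UUID to the UUIDs of its lower connections."""
    root = ET.fromstring(connectivity_context_xml)
    tree = {}
    for conn in root.iter("{%s}connection" % TAPI_CONN):
        uuid = conn.findtext("t:uuid", namespaces=NS)
        if uuid is None:
            # The <connection> inside <connectivity-service> is only a
            # reference (<connection-uuid>), not a connection object.
            continue
        tree[uuid] = [
            lower.findtext("t:connection-uuid", namespaces=NS)
            for lower in conn.findall("t:lower-connection", NS)
        ]
    return tree


def top_level_connections(tree):
    """Return the connections that no other connection lists as a lower connection."""
    lowers = {uuid for children in tree.values() for uuid in children}
    return [uuid for uuid in tree if uuid not in lowers]
```

For the context shown above, the only top-level connection is 92cff1b5-cfa9-4329-9dec-a007a3259109, whose lower connections are a69d88a0-4b01-4ce2-a342-95ae69834481 and 2a1683da-7015-4cde-92c8-5f43d8dd3f9b.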

Delete TAPI Connectivity Service

You can use the script execute-tapi-delete-call.py to delete the created connectivity service. If the client-side service was created, the deletion command and its output are:

$ execute-tapi-delete-call.py 127.0.0.1 both

The json content of deletion operation for connectivity service is

{"tapi-connectivity:input": {"service-id-or-name": "4bb766b4-1e3e-48cb-a302-15f70a353a08"}}.

Returns json string for deletion operations is

<Response [200]>
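The deletion body printed by the script is small enough to build by hand. This sketch constructs it from a service UUID; `delete_service_body` is a hypothetical helper, and sending the body as a POST to the delete-connectivity-service endpoint under the same RESTCONF operations path used by the other RPCs on this page is an assumption, not something confirmed by the script output.

```python
import json


def delete_service_body(service_uuid):
    """Build the delete-connectivity-service input for one service UUID."""
    return {"tapi-connectivity:input": {"service-id-or-name": service_uuid}}


# UUID of the client-side service created earlier on this page.
demo_delete = delete_service_body("4bb766b4-1e3e-48cb-a302-15f70a353a08")
print(json.dumps(demo_delete))
```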

If you run "odtn-show-tapi-context" from the ONOS CLI, you can see that all TAPI objects related to the deleted connectivity-service have been removed.

You can also see that the remote device received an edit-config request and updated its running config. The config that was created by the create-connectivity-service request is deleted.