
Introduction

You will need:

- ONOS installed and running: one ONOS cluster for SONA, plus an ONOS instance for vRouter running on every gateway node.

- An OpenStack deployment installed and running ("stable/mitaka" is used here)

Note that these instructions assume you are familiar with ONOS and OpenStack; they do not explain how to install or troubleshoot these services. If you are not familiar with them, please refer to the ONOS (http://wiki.onosproject.org) and OpenStack (http://docs.openstack.org) documentation, respectively.

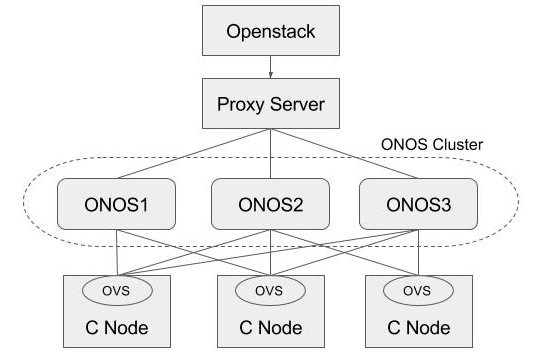

The example deployment depicted in the above figure uses three networks.

- Management network: used by ONOS to control the virtual switches, and by OpenStack to communicate with the nova-compute agent running on each compute node

- Data network: used for East-West traffic via VXLAN tunnel

- External network: used for North-South traffic; normally only the gateway nodes are connected to this network

If you don't have enough network interfaces in your test environment, you can share the networks. You can also emulate the external router. The figure below shows one possible test environment, used in the rest of this guide, in which there is no physical external router; instead, one is emulated with a Docker container.

Prerequisites

1. Install OVS on all nodes, including compute and gateway nodes. Make sure your OVS version is 2.3.0 or later. This guide works very well for me (don't forget to change the version in the guide to 2.3.0 or later).

2. Set OVSDB to listening mode on your compute nodes. There are two ways to do this. Replace "compute_node_ip" below with an address that is reachable from the ONOS instance.

$ ovs-appctl -t ovsdb-server ovsdb-server/add-remote ptcp:6640:compute_node_ip

Or you can make the setting permanent by adding the following line to /usr/share/openvswitch/scripts/ovs-ctl, right after the line beginning with set ovsdb-server "$DB_FILE", and then restarting the openvswitch-switch service.

set "$@" --remote=ptcp:6640

Either way, port 6640 should now be in the listening state.

```
$ netstat -ntl
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN
tcp        0      0 0.0.0.0:6640            0.0.0.0:*               LISTEN
tcp6       0      0 :::22
```
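If you prefer to verify the listener from the ONOS machine rather than on the compute node, a plain TCP probe is enough. The following is a minimal Python sketch using only the standard library; ovsdb_reachable is a hypothetical helper, and a successful connection only shows that something accepts connections on port 6640, not that OVSDB is behaving correctly.

```python
import socket


# Hypothetical helper for illustration; not part of SONA or ONOS.
def ovsdb_reachable(host: str, port: int = 6640, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    This only proves that something is listening on the port; it does not
    validate the OVSDB protocol itself.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, ovsdb_reachable("10.134.231.30") probes compute-01 from the sample deployment.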

3. Check your OVSDB. It is okay if a bridge named br-int already exists, but note that SONA will add or update its controller, DPID, and fail mode.

```
$ sudo ovs-vsctl show
cedbbc0a-f9a4-4d30-a3ff-ef9afa813efb
    ovs_version: "2.5.0"
```

ONOS-SONA Setup

1. Refer to the SONA Network Configuration Guide and write a network configuration for SONA. Place network-cfg.json under tools/package/config/, build the package, and then install ONOS. Here is an example cell configuration and the commands used:

```
onos$ cell
ONOS_CELL=sona
OCI=10.134.231.29
OC1=10.134.231.29
ONOS_APPS=drivers,openflow-base,openstackswitching,openstackrouting
ONOS_GROUP=sdn
ONOS_TOPO=default
ONOS_USER=sdn
ONOS_WEB_PASS=rocks
ONOS_WEB_USER=onos
onos$ buck build onos
onos$ cp ~/network-cfg.json ~/onos/tools/package/config/
onos$ onos-package
onos$ stc setup
```

Make sure to activate "only" the following ONOS applications.

ONOS_APPS=drivers,openflow-base,openstackswitching

If you want the Neutron L3 service, enable openstackrouting as well.

ONOS_APPS=drivers,openflow-base,openstackswitching,openstackrouting

2. Check that all the applications are activated successfully.

```
onos> apps -s -a
*   4 org.onosproject.dhcp                 1.7.0.SNAPSHOT DHCP Server App
*   6 org.onosproject.optical-model        1.7.0.SNAPSHOT Optical information model
*  12 org.onosproject.openflow-base        1.7.0.SNAPSHOT OpenFlow Provider
*  19 org.onosproject.ovsdb-base           1.7.0.SNAPSHOT OVSDB Provider
*  22 org.onosproject.drivers.ovsdb        1.7.0.SNAPSHOT OVSDB Device Drivers
*  27 org.onosproject.openstackinterface   1.7.0.SNAPSHOT OpenStack Interface App
*  28 org.onosproject.openstacknode        1.7.0.SNAPSHOT OpenStack Node Bootstrap App
*  29 org.onosproject.scalablegateway      1.7.0.SNAPSHOT Scalable GW App
*  30 org.onosproject.openstackrouting     1.7.0.SNAPSHOT OpenStack Routing App
*  44 org.onosproject.openstackswitching   1.7.0.SNAPSHOT OpenStack Switching App
*  50 org.onosproject.drivers              1.7.0.SNAPSHOT Default device drivers
```
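The active list includes dependencies pulled in automatically, so checking it by eye is error-prone. A minimal sketch, assuming you have saved the apps -s -a output as text in the format shown above: missing_apps is a hypothetical helper that reports names from an ONOS_APPS-style list that are not marked active.

```python
import re

# Matches one line of "apps -s -a" output, for example
# "*  30 org.onosproject.openstackrouting 1.7.0.SNAPSHOT OpenStack Routing App".
APP_LINE = re.compile(r"^\*\s+\d+\s+org\.onosproject\.(\S+)")


# Hypothetical helpers for illustration; not part of ONOS.
def active_apps(output: str) -> set:
    """Return short app names (without the org.onosproject. prefix) marked active."""
    names = set()
    for line in output.splitlines():
        match = APP_LINE.match(line.strip())
        if match:
            names.add(match.group(1))
    return names


def missing_apps(output: str, onos_apps: str) -> list:
    """Return required apps (comma-separated, as in ONOS_APPS) that are not active."""
    required = [name.strip() for name in onos_apps.split(",") if name.strip()]
    active = active_apps(output)
    return [name for name in required if name not in active]
```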

OpenStack Setup

How to deploy OpenStack is out of the scope of this document. This section only describes the configurations related to SONA; all other settings are up to your environment.

Controller Node

1. Install networking-onos (Neutron ML2 plugin for ONOS) first.

```
$ git clone https://github.com/openstack/networking-onos.git
$ cd networking-onos
$ sudo python setup.py install
```

2. Specify the ONOS access information in the networking-onos configuration file, etc/conf_onos.ini. You may want to copy this file to /etc/neutron/plugins/ml2/, where the other Neutron configuration files are.

```
# Configuration options for ONOS ML2 Mechanism driver
[onos]
# (StrOpt) ONOS ReST interface URL. This is a mandatory field.
url_path = http://10.134.231.29:8181/onos/openstacknetworking
# (StrOpt) Username for authentication. This is a mandatory field.
username = onos
# (StrOpt) Password for authentication. This is a mandatory field.
password = rocks
```

The URL path changed from "onos/openstackswitching" to "onos/openstacknetworking" in ONOS 1.8.0.
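Because the right url_path depends on the ONOS release, it can help to derive it rather than edit it by hand. A minimal sketch; onos_url_path is a hypothetical helper, and it assumes version strings shaped like "1.7.0.SNAPSHOT" or "1.8.0-SNAPSHOT", where only the major and minor numbers matter.

```python
# Hypothetical helper for illustration; not part of networking-onos.
def onos_url_path(onos_ip: str, onos_version: str, port: int = 8181) -> str:
    """Build the url_path value for conf_onos.ini.

    The path changed from onos/openstackswitching to onos/openstacknetworking
    in ONOS 1.8.0. Suffixes such as "SNAPSHOT" are ignored.
    """
    parts = onos_version.replace("-", ".").split(".")
    major, minor = int(parts[0]), int(parts[1])
    path = "openstacknetworking" if (major, minor) >= (1, 8) else "openstackswitching"
    return f"http://{onos_ip}:{port}/onos/{path}"
```

Comparing (major, minor) as a tuple keeps releases such as 1.10 ordered correctly, which a plain string comparison would not.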

3. The next step is to install and run the OpenStack services. DevStack users can use the sample local.conf below to build the OpenStack controller node. Make sure your DevStack branch is consistent with the OpenStack branches ("stable/mitaka" in this example).

```
[[local|localrc]]
HOST_IP=10.134.231.28
SERVICE_HOST=10.134.231.28
RABBIT_HOST=10.134.231.28
DATABASE_HOST=10.134.231.28
Q_HOST=10.134.231.28

ADMIN_PASSWORD=nova
DATABASE_PASSWORD=$ADMIN_PASSWORD
RABBIT_PASSWORD=$ADMIN_PASSWORD
SERVICE_PASSWORD=$ADMIN_PASSWORD
SERVICE_TOKEN=$ADMIN_PASSWORD

DATABASE_TYPE=mysql

# Log
SCREEN_LOGDIR=/opt/stack/logs/screen

# Images
IMAGE_URLS="http://cloud-images.ubuntu.com/releases/14.04/release/ubuntu-14.04-server-cloudimg-amd64.tar.gz,http://www.planet-lab.org/cord/trusty-server-multi-nic.img"
FORCE_CONFIG_DRIVE=True

# Networks
Q_ML2_TENANT_NETWORK_TYPE=vxlan
Q_ML2_PLUGIN_MECHANISM_DRIVERS=onos_ml2
Q_PLUGIN_EXTRA_CONF_PATH=/opt/stack/networking-onos/etc
Q_PLUGIN_EXTRA_CONF_FILES=(conf_onos.ini)
ML2_L3_PLUGIN=networking_onos.plugins.l3.driver.ONOSL3Plugin
NEUTRON_CREATE_INITIAL_NETWORKS=False

# Services
enable_service q-svc
disable_service n-net
disable_service n-cpu
disable_service tempest
disable_service c-sch
disable_service c-api
disable_service c-vol

# Branches
GLANCE_BRANCH=stable/mitaka
HORIZON_BRANCH=stable/mitaka
KEYSTONE_BRANCH=stable/mitaka
NEUTRON_BRANCH=stable/mitaka
NOVA_BRANCH=stable/mitaka
```
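A mismatched *_BRANCH line is easy to miss in a long local.conf. The sketch below, with the hypothetical helper inconsistent_branches, checks only the *_BRANCH variables in the file; it cannot see which branch your DevStack checkout itself is on, so check that separately (for example with git in the devstack directory).

```python
import re

# Matches lines such as "NOVA_BRANCH=stable/mitaka".
BRANCH_LINE = re.compile(r"^\s*([A-Z_]+_BRANCH)\s*=\s*(\S+)\s*$")


# Hypothetical helper for illustration; not part of DevStack.
def inconsistent_branches(local_conf: str, expected: str = "stable/mitaka") -> dict:
    """Return {VARIABLE: value} for every *_BRANCH setting that differs from expected."""
    mismatches = {}
    for line in local_conf.splitlines():
        match = BRANCH_LINE.match(line)
        if match and match.group(2) != expected:
            mismatches[match.group(1)] = match.group(2)
    return mismatches
```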

If you use other deployment tools or build the controller node manually, set the following options in the Nova and Neutron configuration files. First, set Neutron to use the ONOS ML2 mechanism driver and the ONOS L3 service plugin.

```
# /etc/neutron/neutron.conf
[DEFAULT]
core_plugin = neutron.plugins.ml2.plugin.Ml2Plugin
service_plugins = networking_onos.plugins.l3.driver.ONOSL3Plugin
dhcp_agent_notification = False
```

```
# /etc/neutron/plugins/ml2/ml2_conf.ini
[ml2]
tenant_network_types = vxlan
type_drivers = vxlan
mechanism_drivers = onos_ml2

[securitygroup]
enable_security_group = True
```

Set Nova to use the config drive for the metadata service, so that the Neutron metadata agent does not need to run. And, of course, set Nova to use Neutron as its network service.

```
[DEFAULT]
force_config_drive = True
network_api_class = nova.network.neutronv2.api.API
security_group_api = neutron

[neutron]
url = http://10.134.231.28:9696
auth_strategy = keystone
admin_auth_url = http://10.134.231.28:35357/v2.0
admin_tenant_name = service
admin_username = neutron
admin_password = [admin passwd]
```
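Since the same Nova settings must appear on both the controller and the compute nodes, a small check can catch typos before restarting services. A minimal sketch using Python's configparser; nova_conf_problems and REQUIRED_NOVA_OPTIONS are hypothetical names, and the required values are simply the ones from the snippet above.

```python
import configparser

# Hypothetical table for illustration: options from the nova.conf snippet above.
REQUIRED_NOVA_OPTIONS = {
    ("DEFAULT", "force_config_drive"): "True",
    ("DEFAULT", "network_api_class"): "nova.network.neutronv2.api.API",
    ("DEFAULT", "security_group_api"): "neutron",
    ("neutron", "auth_strategy"): "keystone",
}


# Hypothetical helper for illustration; not part of Nova.
def nova_conf_problems(text: str) -> list:
    """Return human-readable problems found in nova.conf contents."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    problems = []
    for (section, option), wanted in REQUIRED_NOVA_OPTIONS.items():
        if section == "DEFAULT":
            # [DEFAULT] values are exposed through parser.defaults().
            actual = parser.defaults().get(option)
        else:
            # fallback also covers a missing section.
            actual = parser.get(section, option, fallback=None)
        if actual != wanted:
            problems.append(f"[{section}] {option} = {actual!r}, expected {wanted!r}")
    return problems
```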

Don't forget to specify conf_onos.ini when you start the Neutron server.

/usr/bin/python /usr/local/bin/neutron-server --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini --config-file /opt/stack/networking-onos/etc/conf_onos.ini

Compute node

No special configuration is required on compute nodes other than setting the network API to Neutron. For DevStack users, here is a sample local.conf.

```
[[local|localrc]]
HOST_IP=10.134.231.30
SERVICE_HOST=10.134.231.28
RABBIT_HOST=10.134.231.28
DATABASE_HOST=10.134.231.28

ADMIN_PASSWORD=nova
DATABASE_PASSWORD=$ADMIN_PASSWORD
RABBIT_PASSWORD=$ADMIN_PASSWORD
SERVICE_PASSWORD=$ADMIN_PASSWORD
SERVICE_TOKEN=$ADMIN_PASSWORD

DATABASE_TYPE=mysql

NOVA_VNC_ENABLED=True
VNCSERVER_PROXYCLIENT_ADDRESS=$HOST_IP
VNCSERVER_LISTEN=$HOST_IP

LIBVIRT_TYPE=kvm

# Log
SCREEN_LOGDIR=/opt/stack/logs/screen

# Services
ENABLED_SERVICES=n-cpu,neutron

# Branches
NOVA_BRANCH=stable/mitaka
KEYSTONE_BRANCH=stable/mitaka
NEUTRON_BRANCH=stable/mitaka
```

If your compute node is a VM, try enabling nested KVM first (http://docs.openstack.org/developer/devstack/guides/devstack-with-nested-kvm.html), or set LIBVIRT_TYPE=qemu. Nested KVM, where available, is much faster than QEMU.
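The choice between kvm and qemu can be made with a simple heuristic. This is a minimal sketch under stated assumptions: choose_libvirt_type and nested_kvm_enabled are hypothetical helpers; the first is meant to run inside the compute VM and treats the presence of /dev/kvm as "KVM is usable", and the second is meant to run on the physical host, reading the kvm_intel module parameter (AMD hosts use /sys/module/kvm_amd/parameters/nested instead).

```python
import os


# Hypothetical helper for illustration; run inside the compute node VM.
def choose_libvirt_type(kvm_device: str = "/dev/kvm") -> str:
    """Return "kvm" if the KVM device node exists, otherwise the slower "qemu"."""
    return "kvm" if os.path.exists(kvm_device) else "qemu"


# Hypothetical helper for illustration; run on the physical host.
def nested_kvm_enabled(param_path: str = "/sys/module/kvm_intel/parameters/nested") -> bool:
    """Return True if the KVM module reports nested virtualization as enabled."""
    try:
        with open(param_path) as handle:
            # The parameter reads "Y"/"N" or "1"/"0" depending on the kernel.
            return handle.read().strip() in ("Y", "1")
    except OSError:
        # Module not loaded, AMD host, or no permission to read the file.
        return False
```

The result of choose_libvirt_type can be written into the LIBVIRT_TYPE line of the compute node's local.conf.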

For manual setups, set Nova to use Neutron as the network API.

```
[DEFAULT]
force_config_drive = True
network_api_class = nova.network.neutronv2.api.API
security_group_api = neutron

[neutron]
url = http://10.134.231.28:9696
auth_strategy = keystone
admin_auth_url = http://10.134.231.28:35357/v2.0
admin_tenant_name = service
admin_username = neutron
admin_password = [admin passwd]
```

Gateway node

No OpenStack service needs to be running on gateway nodes.

Node and ONOS-vRouter Setup

Single Gateway Node Setup

1. After ONOS-SONA and OpenStack are ready, push network-cfg.json and use the openstack-nodes command to check that every COMPUTE type node is in the COMPLETE state. If a node is INCOMPLETE, use the openstack-node-check command to see its state in more detail. GATEWAY type nodes stay in the DEVICE_CREATED state for now; they need the additional configuration explained in the following steps.

$ curl --user onos:rocks -X POST -H "Content-Type: application/json" http://10.134.231.29:8181/onos/v1/network/configuration/ -d @network-cfg.json

```
onos> openstack-nodes
hostname=compute-01, type=COMPUTE, managementIp=10.134.231.30, dataIp=10.134.34.222, intBridge=of:00000000000000a1, routerBridge=Optional.empty init=COMPLETE
hostname=compute-02, type=COMPUTE, managementIp=10.134.231.31, dataIp=10.134.34.223, intBridge=of:00000000000000a2, routerBridge=Optional.empty init=COMPLETE
hostname=gateway-01, type=GATEWAY, managementIp=10.134.231.32, dataIp=10.134.33.224, intBridge=of:00000000000000a3, routerBridge=Optional[of:00000000000000b1] init=DEVICE_CREATED
Total 3 nodes
```

Note that pushing the network configuration triggers reinitialization of all nodes at once. Reinitializing a node that is already in the COMPLETE state does no harm. To reinitialize only a particular compute node, use the openstack-node-init command with its hostname.
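If you push the configuration from a script rather than with curl, the same request can be built with Python's standard library. A minimal sketch, assuming the endpoint, port 8181, and the onos/rocks credentials from the curl command above; build_netcfg_request and push_netcfg are hypothetical helpers, and only push_netcfg actually contacts ONOS.

```python
import base64
import json
import urllib.request


# Hypothetical helper for illustration; not part of ONOS.
def build_netcfg_request(onos_ip: str, config: dict, user: str = "onos",
                         password: str = "rocks") -> urllib.request.Request:
    """Build (but do not send) the same POST that the curl command issues."""
    url = f"http://{onos_ip}:8181/onos/v1/network/configuration/"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps(config).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",  # HTTP basic auth, like curl --user
        },
        method="POST",
    )


# Hypothetical helper for illustration; sends the request.
def push_netcfg(onos_ip: str, path: str) -> int:
    """Load network-cfg.json from path, POST it to ONOS, and return the HTTP status."""
    with open(path) as handle:
        config = json.load(handle)
    with urllib.request.urlopen(build_netcfg_request(onos_ip, config), timeout=10) as resp:
        return resp.status
```

For example, push_netcfg("10.134.231.29", "network-cfg.json") mirrors the curl command; separating request construction from sending makes the request easy to inspect without a running ONOS.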

2. GATEWAY type nodes require Quagga and an additional ONOS instance for vRouter, both of which run as Docker containers in this guide. Download and install Docker first.

$ wget -qO- https://get.docker.com/ | sudo sh

3. Download the scripts that help set up the gateway node.

```
$ git clone https://github.com/hyunsun/sona-setup.git
$ cd sona-setup
```

4. First, write a config file for setting up the gateway node. Name it vRouterConfig.ini and place it under sona-setup.

```
[DEFAULT]
routerBridge = "of:00000000000000b1"    #Unique device ID of the router bridge
floatingCidr = "172.27.0.0/24"          #Floating IP ranges
dummyHostIp = "172.27.0.1"              #Gateway IP address of the floating IP subnet
quaggaMac = "fe:00:00:00:00:01"         #Quagga MAC address
quaggaIp = "172.18.0.254/30"            #Quagga instance IP address
gatewayName = "gateway-01"              #Quagga instance name
bgpNeighborIp = "172.18.0.253/30"       #IP address of the external BGP router that quagga instance peered to
asNum = 65101                           #AS number of Quagga instance
peerAsNum = 65100                       #AS number of external BGP router
uplinkPortNum = "26"                    #Port number of uplink interface
```
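Before running the setup scripts, it can be worth sanity-checking vRouterConfig.ini. A minimal sketch with Python's configparser, assuming the file looks like the example above (quoted values, "#" comments after whitespace); load_vrouter_config and peering_subnet_ok are hypothetical helpers, not part of sona-setup, and the scripts may parse the file differently.

```python
import configparser
import ipaddress


# Hypothetical helper for illustration; not part of sona-setup.
def load_vrouter_config(text: str) -> dict:
    """Parse vRouterConfig.ini text into a dict of strings.

    Inline "#..." comments are stripped and the surrounding double quotes
    used in the example file are removed.
    """
    parser = configparser.ConfigParser(inline_comment_prefixes=("#",), interpolation=None)
    parser.optionxform = str  # keep camelCase keys such as routerBridge
    parser.read_string(text)
    return {key: value.strip('"') for key, value in parser.defaults().items()}


# Hypothetical helper for illustration.
def peering_subnet_ok(cfg: dict) -> bool:
    """Check that quaggaIp and bgpNeighborIp are distinct addresses on one subnet."""
    local = ipaddress.ip_interface(cfg["quaggaIp"])
    peer = ipaddress.ip_interface(cfg["bgpNeighborIp"])
    return local.network == peer.network and local.ip != peer.ip
```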

5. Next, run createJsonAndvRouter.sh, which automatically creates vrouter.json and runs an ONOS container with the vRouter application activated.

```
sona-setup$ ./createJsonAndvRouter.sh
sona-setup$ sudo docker ps
CONTAINER ID   IMAGE                  COMMAND                CREATED      STATUS      PORTS                                    NAMES
e5ac67e62bbb   onosproject/onos:1.6   "./bin/onos-service"   9 days ago   Up 9 days   6653/tcp, 8101/tcp, 8181/tcp, 9876/tcp   onos-vrouter
```

6. Next, run createQuagga.sh, which automatically creates volumes/gateway/zebra.conf and volumes/gateway/bgpd.conf, updates vrouter.json, and runs a Quagga container based on those config files.

```
sona-setup$ ./createQuagga.sh
sona-setup$ sudo docker ps
CONTAINER ID   IMAGE                  COMMAND                  CREATED        STATUS        PORTS                                    NAMES
978dadf41240   onosproject/onos:1.6   "./bin/onos-service"     11 hours ago   Up 11 hours   6653/tcp, 8101/tcp, 8181/tcp, 9876/tcp   onos-vrouter
5bf4f2d59919   hyunsun/quagga-fpm     "/usr/bin/supervisord"   11 hours ago   Up 11 hours   179/tcp, 2601/tcp, 2605/tcp              gateway-01
```

bgpd.conf for gateway-01:

```
! -*- bgp -*-
!
! BGPd sample configuration file
!
!
hostname gateway-01
password zebra
!
router bgp 65101
  bgp router-id 172.18.0.254
  timers bgp 3 9
  neighbor 172.18.0.253 remote-as 65100
  neighbor 172.18.0.253 ebgp-multihop
  neighbor 172.18.0.253 timers connect 5
  neighbor 172.18.0.253 advertisement-interval 5
  network 172.27.0.0/24
!
log file /var/log/quagga/bgpd.log
```

zebra.conf for gateway-01:

```
!
hostname gateway-01
password zebra
!
fpm connection ip 172.17.0.2 port 2620
```

Note that the FPM connection IP must be the eth0 IP address of the ONOS-vRouter container. Docker assigns container IP addresses incrementally as new containers are created, so it is normally 172.17.0.2; if you have problems with the FPM connection later, check that this IP is correct.
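To make the relationship between vRouterConfig.ini and the generated Quagga files explicit, here is a minimal rendering sketch. render_bgpd_conf and render_zebra_conf are hypothetical helpers that reproduce the gateway-01 example above from the config values; they are not the actual implementation of createQuagga.sh, which may produce slightly different output.

```python
# Hypothetical helper for illustration; not the implementation of createQuagga.sh.
def render_bgpd_conf(cfg: dict) -> str:
    """Render a bgpd.conf like the gateway-01 example from vRouterConfig values."""
    router_id = cfg["quaggaIp"].split("/")[0]       # drop the /30 prefix length
    neighbor = cfg["bgpNeighborIp"].split("/")[0]
    lines = [
        "! -*- bgp -*-",
        "!",
        "! BGPd sample configuration file",
        "!",
        "!",
        f"hostname {cfg['gatewayName']}",
        "password zebra",
        "!",
        f"router bgp {cfg['asNum']}",
        f"  bgp router-id {router_id}",
        "  timers bgp 3 9",
        f"  neighbor {neighbor} remote-as {cfg['peerAsNum']}",
        f"  neighbor {neighbor} ebgp-multihop",
        f"  neighbor {neighbor} timers connect 5",
        f"  neighbor {neighbor} advertisement-interval 5",
        f"  network {cfg['floatingCidr']}",          # advertise the floating range
        "!",
        "log file /var/log/quagga/bgpd.log",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical helper for illustration.
def render_zebra_conf(cfg: dict, fpm_ip: str = "172.17.0.2") -> str:
    """Render zebra.conf; fpm_ip must be the ONOS-vRouter container's eth0 address."""
    lines = ["!", f"hostname {cfg['gatewayName']}", "password zebra", "!",
             f"fpm connection ip {fpm_ip} port 2620"]
    return "\n".join(lines) + "\n"
```

If the FPM address turns out not to be 172.17.0.2, pass the container's actual eth0 address as fpm_ip.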

If you check the output of ovs-ofctl show, there should be a new port named quagga on the br-router bridge.

```
sona-setup$ sudo ovs-ofctl show br-router
OFPT_FEATURES_REPLY (xid=0x2): dpid:00000000000000b1
n_tables:254, n_buffers:256
capabilities: FLOW_STATS TABLE_STATS PORT_STATS QUEUE_STATS ARP_MATCH_IP
actions: output enqueue set_vlan_vid set_vlan_pcp strip_vlan mod_dl_src mod_dl_dst mod_nw_src mod_nw_dst mod_nw_tos mod_tp_src mod_tp_dst
 1(patch-rout): addr:1a:46:69:5a:8e:f6
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 2(quagga): addr:7a:9b:05:57:2c:ff
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
 LOCAL(br-router): addr:1a:13:72:57:4a:4d
     config:     PORT_DOWN
     state:      LINK_DOWN
     speed: 0 Mbps now, 0 Mbps max
OFPT_GET_CONFIG_REPLY (xid=0x4): frags=normal miss_send_len=0
```

7. If your setup has no external router (or emulation of one), add another Quagga container that acts as the external router. Simply run createQuaggaRouter.sh, which automatically creates volumes/gateway/zebra.conf and volumes/gateway/bgpd.conf, modifies vrouter.json and vRouterConfig.ini, and runs the Quagga router instance.

```
sona-setup$ ./createQuaggaRouter.sh
sona-setup$ sudo docker ps
CONTAINER ID   IMAGE                  COMMAND                  CREATED        STATUS        PORTS                                    NAMES
978dadf41240   onosproject/onos:1.6   "./bin/onos-service"     11 hours ago   Up 11 hours   6653/tcp, 8101/tcp, 8181/tcp, 9876/tcp   onos-vrouter
32b10a038d78   hyunsun/quagga-fpm     "/usr/bin/supervisord"   11 hours ago   Up 11 hours   179/tcp, 2601/tcp, 2605/tcp              router-01
5bf4f2d59919   hyunsun/quagga-fpm     "/usr/bin/supervisord"   11 hours ago   Up 11 hours   179/tcp, 2601/tcp, 2605/tcp              gateway-01
```

bgpd.conf for router-01:

```
! -*- bgp -*-
!
! BGPd sample configuration file
!
!
hostname router-01
password zebra
!
router bgp 65100
  bgp router-id 172.18.0.253
  timers bgp 3 9
  neighbor 172.18.0.254 remote-as 65101
  neighbor 172.18.0.254 ebgp-multihop
  neighbor 172.18.0.254 timers connect 5
  neighbor 172.18.0.254 advertisement-interval 5
  neighbor 172.18.0.254 default-originate
!
log file /var/log/quagga/bgpd.log
```

zebra.conf for router-01:

```
!
hostname router-01
password zebra
!
```

If you check the output of ovs-ofctl show again, there should be a new port named quagga-router on the br-router bridge.

8. Now fix the port numbers in the ONOS-vRouter network configuration (the vrouter.json file). You can find all the OpenFlow port numbers needed for the configuration with the "ovs-ofctl show br-router" command. Fix the port numbers as shown below.

```
$ sudo ovs-ofctl show br-router
OFPT_FEATURES_REPLY (xid=0x2): dpid:00000000000000b1
n_tables:254, n_buffers:256
capabilities: FLOW_STATS TABLE_STATS PORT_STATS QUEUE_STATS ARP_MATCH_IP
actions: output enqueue set_vlan_vid set_vlan_pcp strip_vlan mod_dl_src mod_dl_dst mod_nw_src mod_nw_dst mod_nw_tos mod_tp_src mod_tp_dst
 1(patch-rout): addr:96:67:91:a4:24:f7
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 24(quagga): addr:52:b2:b0:0f:b1:5b
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
 25(quagga-router): addr:1a:64:b1:37:c7:a6
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
 LOCAL(br-router): addr:5a:59:0a:9f:0f:4c
     config:     PORT_DOWN
     state:      LINK_DOWN
     speed: 0 Mbps now, 0 Mbps max
OFPT_GET_CONFIG_REPLY (xid=0x4): frags=normal miss_send_len=0
```

```json
"apps" : {
  "org.onosproject.router" : {
    "router" : {
      "controlPlaneConnectPoint" : "of:00000000000000b1/24",
      "ospfEnabled" : "true",
      "interfaces" : [ "b1-1", "b1-2" ]
    }
  }
},
"ports" : {
  "of:00000000000000b1/25" : {
    "interfaces" : [
      {
        "name" : "b1-1",
        "ips" : [ "172.18.0.254/30" ],
        "mac" : "fe:00:00:00:00:01"
      }
    ]
  },
  "of:00000000000000b1/1" : {
    "interfaces" : [
      {
        "name" : "b1-2",
        "ips" : [ "172.27.0.254/24" ],
        "mac" : "fe:00:00:00:00:01"
      }
    ]
  }
},
"hosts" : {
  "fe:00:00:00:00:02/-1" : {
    "basic": {
      "ips": ["172.27.0.1"],
      "location": "of:00000000000000b1/1"
    }
  }
}
```

- Line 4 (controlPlaneConnectPoint): device ID and port number of the port with portName=quagga
- Line 11: device ID and port number of the port with portName=quagga-router, or of another actual uplink port

If you have a floating IP range (172.27.0.0/24 in this example), also check the following configurations.

- Line 20 (optional interface config for the floating IP address range): device ID and port number of the port with portName=patch-rout
- Line 34 (optional host config for the floating IP gateway): device ID and port number of the port with portName=patch-rout
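Reading port numbers off the ovs-ofctl output by hand is easy to get wrong. A minimal sketch, assuming the output format shown above: port_numbers and connect_point are hypothetical helpers that map port names to OpenFlow port numbers and build ONOS connect points of the form of:DPID/PORT for the vrouter.json fields.

```python
import re

# Port header lines look like " 24(quagga): addr:52:b2:b0:0f:b1:5b".
PORT_LINE = re.compile(r"^\s*(\d+|LOCAL)\((?P<name>[^)]+)\):")
DPID_LINE = re.compile(r"dpid:([0-9a-fA-F]{16})")


# Hypothetical helper for illustration; not part of OVS or ONOS.
def port_numbers(ofctl_output: str) -> dict:
    """Map port name -> OpenFlow port number; the LOCAL port is skipped."""
    ports = {}
    for line in ofctl_output.splitlines():
        match = PORT_LINE.match(line)
        if match and match.group(1) != "LOCAL":
            ports[match.group("name")] = int(match.group(1))
    return ports


# Hypothetical helper for illustration.
def connect_point(ofctl_output: str, port_name: str) -> str:
    """Build an ONOS connect point such as of:00000000000000b1/24."""
    dpid = DPID_LINE.search(ofctl_output).group(1).lower()
    return f"of:{dpid}/{port_numbers(ofctl_output)[port_name]}"
```

The text can come from subprocess.run(["sudo", "ovs-ofctl", "show", "br-router"], capture_output=True, text=True).stdout. Then connect_point(text, "quagga") gives the Line 4 value, "quagga-router" the Line 11 key, and "patch-rout" the Line 20 and Line 34 values.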

Then restart the vRouter container by running vrouter.sh.

```
sona-setup$ ./vrouter.sh
sona-setup$ sudo docker ps
CONTAINER ID   IMAGE                  COMMAND                  CREATED              STATUS          PORTS                                    NAMES
3cdbf6a76d10   onosproject/onos:1.6   "./bin/onos-service"     About a minute ago   Up 59 seconds   6653/tcp, 8101/tcp, 8181/tcp, 9876/tcp   onos-vrouter
d5763d29afc4   hyunsun/quagga-fpm     "/usr/bin/supervisord"   3 minutes ago        Up 3 minutes    179/tcp, 2601/tcp, 2605/tcp              router-01
74f429f98174   hyunsun/quagga-fpm     "/usr/bin/supervisord"   4 minutes ago        Up 4 minutes    179/tcp, 2601/tcp, 2605/tcp              gateway-01
```

9. If everything is right, check the FPM connections, hosts, next hops, and routes. In this example, 172.18.0.253 is the external default gateway. If you added the interface and host for the floating IP range, that host should also appear in the list.

```
onos> hosts
id=FA:00:00:00:00:01/None, mac=FA:00:00:00:00:01, location=of:00000000000000b1/25, vlan=None, ip(s)=[172.18.0.253]
id=FE:00:00:00:00:02/None, mac=FE:00:00:00:00:02, location=of:00000000000000b1/1, vlan=None, ip(s)=[172.27.0.1], name=FE:00:00:00:00:02/None

onos> fpm-connections
172.17.0.3:52332 connected since 6m ago

onos> next-hops
ip=172.18.0.253, mac=FA:00:00:00:00:01, numRoutes=1

onos> routes
Table: ipv4
   Network            Next Hop
   0.0.0.0/0          172.18.0.253
   Total: 1

Table: ipv6
   Network            Next Hop
   Total: 0
```

10. Manually add a route for the floating IP range, and check that the route has been added.

```
onos> route-add 172.27.0.0/24 172.27.0.1
onos> routes
Table: ipv4
   Network            Next Hop
   0.0.0.0/0          172.18.0.253
   172.27.0.0/24      172.27.0.1
   Total: 2

Table: ipv6
   Network            Next Hop
   Total: 0

onos> next-hops
ip=172.18.0.253, mac=FA:00:00:00:00:01, numRoutes=1
ip=172.27.0.1, mac=FE:00:00:00:00:02, numRoutes=1
```
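When scripting this check, the routes output can be parsed into a prefix-to-next-hop map. A minimal sketch, assuming the table format shown above (other ONOS versions may format it differently); parse_routes and missing_routes are hypothetical helpers.

```python
import ipaddress


# Hypothetical helper for illustration; not part of ONOS.
def parse_routes(output: str) -> dict:
    """Return {prefix: next_hop} for every route row in the routes output."""
    routes = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) != 2:
            continue  # skips the "Network Next Hop" header and blank lines
        try:
            prefix = ipaddress.ip_network(fields[0])
            next_hop = ipaddress.ip_address(fields[1])
        except ValueError:
            continue  # "Table: ipv4", "Total: 2", and similar lines
        routes[str(prefix)] = str(next_hop)
    return routes


# Hypothetical helper for illustration.
def missing_routes(output: str, expected: dict) -> dict:
    """Return the expected routes that are absent or point to another next hop."""
    actual = parse_routes(output)
    return {p: nh for p, nh in expected.items() if actual.get(p) != nh}
```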

11. When you re-trigger node initialization, the gateway node should now reach the COMPLETE state. You can either run openstack-node-init gateway-01 or push the network configuration file again.

onos> openstack-node-init gateway-01

Or

$ curl --user onos:rocks -X POST -H "Content-Type: application/json" http://10.134.231.29:8181/onos/v1/network/configuration/ -d @network-cfg.json

```
onos> openstack-nodes
hostname=compute-01, type=COMPUTE, managementIp=10.134.231.30, dataIp=10.134.34.222, intBridge=of:00000000000000a1, routerBridge=Optional.empty init=COMPLETE
hostname=compute-02, type=COMPUTE, managementIp=10.134.231.31, dataIp=10.134.34.223, intBridge=of:00000000000000a2, routerBridge=Optional.empty init=COMPLETE
hostname=gateway-01, type=GATEWAY, managementIp=10.134.231.32, dataIp=10.134.33.224, intBridge=of:00000000000000a3, routerBridge=Optional[of:00000000000000b1] init=COMPLETE
hostname=gateway-02, type=GATEWAY, managementIp=10.134.231.33, dataIp=10.134.33.225, intBridge=of:00000000000000a4, routerBridge=Optional[of:00000000000000b2] init=COMPLETE
Total 4 nodes
```
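With many nodes, a script can list the ones that still need attention. A minimal sketch, assuming the openstack-nodes output format shown above; node_states and incomplete_nodes are hypothetical helpers, and any hostname they return is a candidate for openstack-node-check.

```python
import re

# Matches "hostname=..., type=..., ... init=STATE" lines of openstack-nodes.
NODE_LINE = re.compile(r"hostname=(?P<host>[^,\s]+), type=(?P<type>\w+),.*\binit=(?P<state>\w+)")


# Hypothetical helper for illustration; not part of ONOS.
def node_states(output: str) -> dict:
    """Map hostname -> (node type, init state)."""
    states = {}
    for line in output.splitlines():
        match = NODE_LINE.search(line)
        if match:
            states[match.group("host")] = (match.group("type"), match.group("state"))
    return states


# Hypothetical helper for illustration.
def incomplete_nodes(output: str) -> list:
    """Return sorted hostnames whose init state is not COMPLETE."""
    return sorted(host for host, (_, state) in node_states(output).items()
                  if state != "COMPLETE")
```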

Multiple Gateway Nodes Setup

SONA supports multiple gateway nodes for both HA and scalability. Here is another example with multiple gateway nodes and an external upstream router. In this scenario, each gateway node must have a unique IP and MAC address for peering, so that the upstream router treats each of them as a different router. The ONOS scalable gateway application is responsible for sending upstream packets out through one of the gateway nodes, and the upstream router is responsible for sending downstream packets in through one of the gateway nodes.

The following is an example Quagga configuration of the second gateway node.

bgpd.conf for gateway-02:

```
! -*- bgp -*-
!
! BGPd sample configuration file
!
!
hostname gateway-02
password zebra
!
router bgp 65101
  bgp router-id 172.18.0.250
  timers bgp 3 9
  neighbor 172.18.0.249 remote-as 65100
  neighbor 172.18.0.249 ebgp-multihop
  neighbor 172.18.0.249 timers connect 5
  neighbor 172.18.0.249 advertisement-interval 5
  network 172.27.0.0/24
!
log file /var/log/quagga/bgpd.log
```

zebra.conf for gateway-02:

```
!
hostname gateway-02
password zebra
!
fpm connection ip 172.17.0.2 port 2620
```

Once you are done with the configuration for the second Quagga instance, run the quagga.sh script to bring up a Quagga container whose MAC address differs from that of the first gateway node's Quagga container.

$ ./quagga.sh --name=gateway-02 --ip=172.18.0.250/30 --mac=fe:00:00:00:00:03

You also need to run ONOS-vRouter on the second gateway node. For successful peering, make sure to set the correct IP and MAC address (172.18.0.250 and fe:00:00:00:00:03) instead of the 172.18.0.254 and fe:00:00:00:00:01 used in the first gateway's network config example.

```json
"apps" : {
  "org.onosproject.router" : {
    "router" : {
      "controlPlaneConnectPoint" : "of:00000000000000b2/2",
      "ospfEnabled" : "true",
      "interfaces" : [ "b1-1", "b1-2" ]
    }
  }
},
"ports" : {
  "of:00000000000000b2/3" : {
    "interfaces" : [
      {
        "name" : "b1-1",
        "ips" : [ "172.18.0.250/30" ],
        "mac" : "fe:00:00:00:00:03"
      }
    ]
  },
  "of:00000000000000b2/1" : {
    "interfaces" : [
      {
        "name" : "b1-2",
        "ips" : [ "172.27.0.254/24" ],
        "mac" : "fe:00:00:00:00:01"
      }
    ]
  }
},
"hosts" : {
  "fe:00:00:00:00:02/-1" : {
    "basic": {
      "ips": ["172.27.0.1"],
      "location": "of:00000000000000b2/1"
    }
  }
}
```

$ ./vrouter.sh

Now configure the upstream router as below.

```
router bgp 65100
   timers bgp 3 9
   distance bgp 20 200 200
   maximum-paths 2 ecmp 2
   neighbor 172.18.0.254 remote-as 65101
   neighbor 172.18.0.254 maximum-routes 12000
   neighbor 172.18.0.250 remote-as 65101
   neighbor 172.18.0.250 maximum-routes 12000
   redistribute connected

#routed port connected to gateway-01
interface Ethernet43
   no switchport
   ip address 172.18.0.253/30

#routed port connected to gateway-02
interface Ethernet44
   no switchport
   ip address 172.18.0.249/30
```
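The multi-gateway setup only peers correctly if each gateway has its own IP and MAC and shares a point-to-point subnet with the upstream router port facing it. A minimal validation sketch: peering_problems is a hypothetical helper, and the gateway dictionary layout is an assumption made for illustration, filled here with the addresses used above.

```python
import ipaddress
from collections import Counter


# Hypothetical helper for illustration; not part of SONA or sona-setup.
def peering_problems(gateways: dict) -> list:
    """Return a list of problems with per-gateway peering settings.

    gateways maps a gateway name to a dict with keys:
      "ip"   - Quagga peering address with prefix, e.g. "172.18.0.254/30"
      "mac"  - Quagga MAC address
      "peer" - upstream router address on the same link, e.g. "172.18.0.253"
    """
    problems = []
    # Each gateway needs its own IP and MAC so the upstream router
    # can treat every gateway as a separate BGP peer.
    for field in ("ip", "mac"):
        counts = Counter(g[field].lower() for g in gateways.values())
        problems += [f"duplicate {field} {value}" for value, n in counts.items() if n > 1]
    # The upstream router's address must sit on the same point-to-point subnet.
    for name, g in gateways.items():
        network = ipaddress.ip_interface(g["ip"]).network
        if ipaddress.ip_address(g["peer"]) not in network:
            problems.append(f"{name}: peer {g['peer']} is outside {network}")
    return problems
```

With the values above (gateway-01 at 172.18.0.254/30 peering with 172.18.0.253, gateway-02 at 172.18.0.250/30 peering with 172.18.0.249, distinct MACs), the function returns an empty list.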

HA Setup

ONOS itself provides HA by default when there are multiple instances in the cluster. This section describes how to add a proxy server in front of the ONOS cluster and use it in Neutron as a single access point to the cluster. HAProxy (http://www.haproxy.org) is used as the proxy server here.

1. Install HAProxy.

```
$ sudo add-apt-repository -y ppa:vbernat/haproxy-1.5
$ sudo apt-get update
$ sudo apt-get install -y haproxy
```

2. Configure HAProxy (/etc/haproxy/haproxy.cfg).

```
global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin
    stats timeout 30s
    user haproxy
    group haproxy
    daemon

    # Default SSL material locations
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private

    # Default ciphers to use on SSL-enabled listening sockets.
    # For more information, see ciphers(1SSL). This list is from:
    # https://hynek.me/articles/hardening-your-web-servers-ssl-ciphers/
    ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:ECDH+3DES:DH+3DES:RSA+AESGCM:RSA+AES:RSA+3DES:!aNULL:!MD5:!DSS
    ssl-default-bind-options no-sslv3

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

frontend localnodes
    bind *:8181
    mode http
    default_backend nodes

backend nodes
    mode http
    balance roundrobin
    option forwardfor
    http-request set-header X-Forwarded-Port %[dst_port]
    http-request add-header X-Forwarded-Proto https if { ssl_fc }
    option httpchk GET /onos/ui/login.html
    server web01 [onos-01 IP address]:8181 check
    server web02 [onos-02 IP address]:8181 check
    server web03 [onos-03 IP address]:8181 check

listen stats *:1936
    stats enable
    stats uri /
    stats hide-version
    stats auth someuser:password
```
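To make the effect of balance roundrobin combined with the per-server check option concrete, here is a simplified Python model: requests rotate over the ONOS instances, and instances failing their health check are skipped. This is an illustrative sketch only; round_robin is a hypothetical helper, the server names are placeholders, and HAProxy's real scheduler also accounts for server weights and other settings.

```python
from itertools import cycle
from typing import Callable, Iterator, List


# Hypothetical model for illustration; not HAProxy's actual implementation.
def round_robin(servers: List[str], is_healthy: Callable[[str], bool]) -> Iterator[str]:
    """Yield servers in turn, skipping any that currently fail their health check."""
    ring = cycle(servers)
    while True:
        # Try each server at most once per pick.
        for _ in range(len(servers)):
            server = next(ring)
            if is_healthy(server):
                yield server
                break
        else:
            raise RuntimeError("no healthy ONOS instance available")
```

In HAProxy the health check is the HTTP GET of /onos/ui/login.html configured by option httpchk; in this model it is whatever function you pass as is_healthy.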

3. In the Neutron ML2 ONOS mechanism driver configuration, set url_path to point to the proxy server, and restart Neutron. (With ONOS 1.8.0 or later, use onos/openstacknetworking instead of onos/openstackswitching.)

```
# Configuration options for ONOS ML2 Mechanism driver
[onos]
# (StrOpt) ONOS ReST interface URL. This is a mandatory field.
url_path = http://[proxy-server IP]:8181/onos/openstackswitching
# (StrOpt) Username for authentication. This is a mandatory field.
username = onos
# (StrOpt) Password for authentication. This is a mandatory field.
password = rocks
```

4. Stop one of the ONOS instances and check that everything still works.

$ onos-service $OC1 stop

Scale Out Nodes

Scaling out compute or gateway nodes is easy: just add the new node to the SONA network configuration and push the updated configuration to ONOS-SONA.

CLI Commands

| Command | Usage | Description |
|---|---|---|
| openstack-nodes | openstack-nodes | Shows the list of compute and gateway nodes registered to the OpenStack node service |
| openstack-node-check | openstack-node-check [hostname] | Shows the state of each bootstrap step of the node |
| openstack-node-init | openstack-node-init [hostname] | Tries to re-initialize the given node. Re-initializing a node already in the COMPLETE state does no harm. |